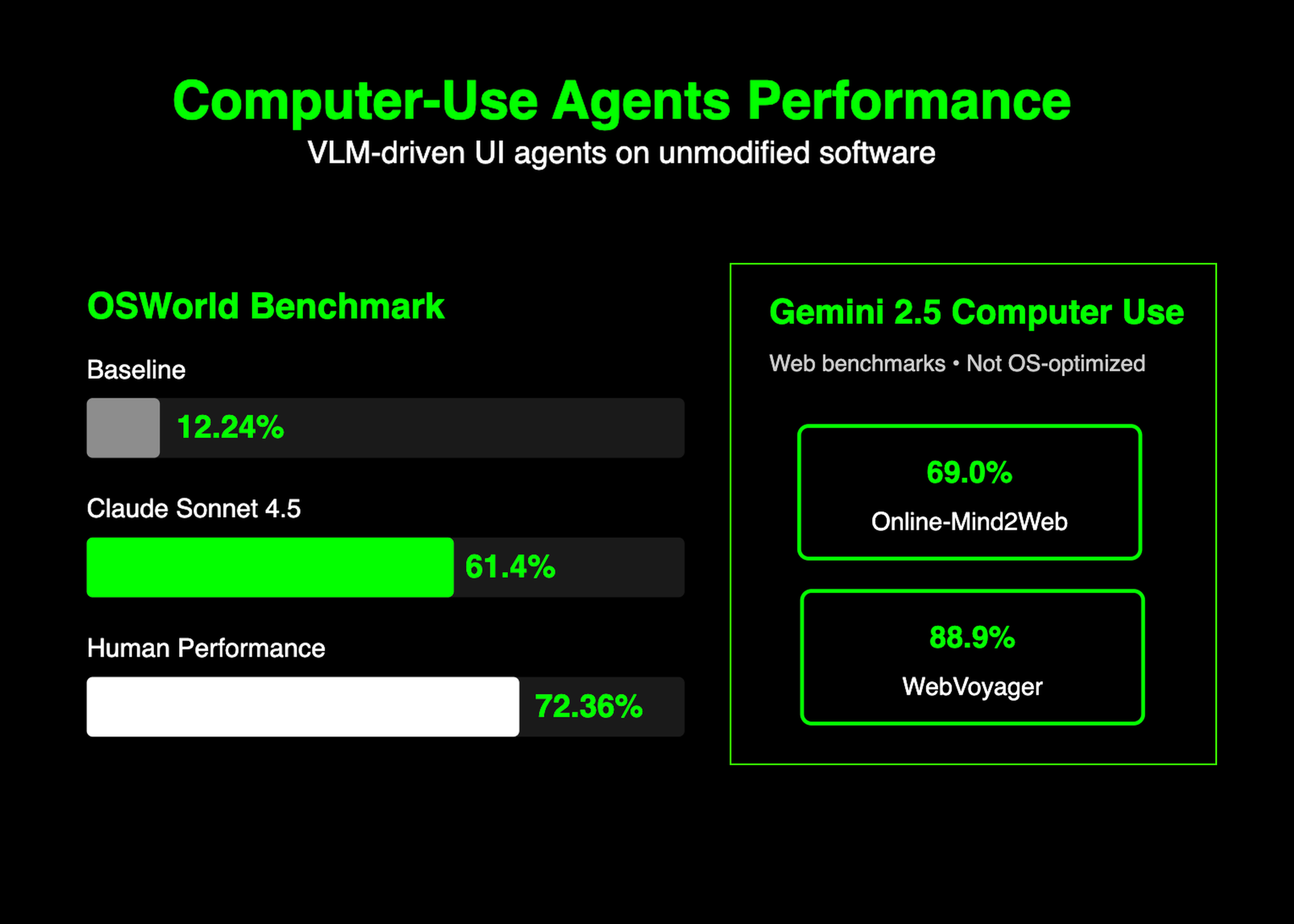

TL;DR: Computer-use agents are VLM-driven UI agents that act like users on unmodified software. Baselines on OSWorld started at 12.24% (human 72.36%); Claude Sonnet 4.5 now reports 61.4%. Gemini 2.5 Computer Use leads several web benchmarks (Online-Mind2Web 69.0%, WebVoyager 88.9%) but is not yet OS-optimized. Next steps center on OS-level robustness, sub-second action loops, and hardened safety policies, with transparent training/evaluation recipes emerging from the open community.

Definition

Computer-use agents (a.k.a. GUI agents) are vision-language models that observe the screen, ground UI elements, and execute bounded UI actions (click, type, scroll, key-combos) to complete tasks in unmodified applications and browsers. Public implementations include Anthropic’s Computer Use, Google’s Gemini 2.5 Computer Use, and OpenAI’s Computer-Using Agent powering Operator.

Control Loop

Typical runtime loop: (1) capture screenshot + state, (2) plan next action with spatial/semantic grounding, (3) act via a constrained action schema, (4) verify and retry on failure. Vendors document standardized action sets and guardrails; audited harnesses normalize comparisons.
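The four-step loop above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: `capture`, `plan`, `act`, and `verify` are hypothetical callables supplied by the caller, and the step and retry budgets are arbitrary assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    verb: str   # e.g. "click_at" or "type"
    args: dict  # verb-specific arguments


def run_episode(
    capture: Callable[[], str],
    plan: Callable[[str, str], Optional[Action]],
    act: Callable[[Action], None],
    verify: Callable[[str, Action, str], bool],
    goal: str,
    max_steps: int = 20,
    max_retries: int = 2,
) -> bool:
    """Observe -> plan -> act -> verify; True once the planner reports completion."""
    for _ in range(max_steps):
        before = capture()                     # (1) capture screenshot + state
        action = plan(goal, before)            # (2) plan the next action
        if action is None:                     # planner signals the goal is reached
            return True
        for _attempt in range(max_retries + 1):
            act(action)                        # (3) act via the action schema
            after = capture()
            if verify(before, action, after):  # (4) verify, otherwise retry
                break
        else:
            return False                       # retries exhausted
    return False                               # step budget exhausted


# Toy environment (assumption): the "screen" is a counter rendered as text.
state = {"count": 0}


def capture() -> str:
    return f"count={state['count']}"


def plan(goal: str, screen: str) -> Optional[Action]:
    return None if screen == goal else Action("click_at", {"x": 10, "y": 10})


def act(action: Action) -> None:
    state["count"] += 1


def verify(before: str, action: Action, after: str) -> bool:
    return before != after  # the click must have changed the screen


print(run_episode(capture, plan, act, verify, goal="count=3"))
```

In a real agent, `capture` would return pixels and `plan` would call the model. The structure stays the same: every action is followed by a fresh observation before the next decision.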

Benchmark Landscape

- OSWorld (HKU, Apr 2024): 369 real desktop/web tasks spanning OS file I/O and multi-app workflows. At release, human 72.36%, best model 12.24%.

- State of play (2025): Anthropic Claude Sonnet 4.5 reports 61.4% on OSWorld, still below the human baseline but a large jump from the 42.2% reported for Sonnet 4.

- Live-web benchmarks: Google’s Gemini 2.5 Computer Use reports 69.0% on Online-Mind2Web (official leaderboard), 88.9% on WebVoyager, 69.7% on AndroidWorld; the current model is browser-optimized and not yet optimized for OS-level control.

- Online-Mind2Web spec: 300 tasks across 136 live websites; results verified by Princeton/HAL and a public HF space.

Architecture Components

- Perception & Grounding: periodic screenshots, OCR/text extraction, element localization, coordinate inference.

- Planning: multi-step policy with recovery; often post-trained/RL-tuned for UI control.

- Action Schema: bounded verbs (click_at, type, key_combo, open_app), with benchmark-specific exclusions to prevent tool shortcuts.

- Evaluation Harness: live-web/VM sandboxes with third-party auditing and reproducible execution scripts.
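A bounded action schema with benchmark-specific exclusions can be sketched as a small validation table. The verb names are the ones listed above. The argument names and types are illustrative assumptions and do not reproduce any vendor's actual schema.

```python
from typing import Any

# Verb names come from the article; argument names and types are assumptions.
ACTION_SCHEMA: dict[str, dict[str, type]] = {
    "click_at": {"x": int, "y": int},
    "type": {"text": str},
    "key_combo": {"keys": list},
    "open_app": {"name": str},
}


def validate_action(
    verb: str,
    args: dict[str, Any],
    excluded: frozenset[str] = frozenset(),
) -> None:
    """Raise ValueError unless (verb, args) fits the schema and is not excluded."""
    if verb in excluded:
        raise ValueError(f"verb {verb!r} is excluded for this benchmark")
    if verb not in ACTION_SCHEMA:
        raise ValueError(f"unknown verb {verb!r}")
    expected = ACTION_SCHEMA[verb]
    if set(args) != set(expected):
        raise ValueError(
            f"{verb} expects arguments {sorted(expected)}, got {sorted(args)}"
        )
    for name, expected_type in expected.items():
        if not isinstance(args[name], expected_type):
            raise ValueError(f"{verb}.{name} must be {expected_type.__name__}")


# A well-formed click passes silently.
validate_action("click_at", {"x": 120, "y": 48})

# The same schema with open_app excluded, as a benchmark might require.
try:
    validate_action("open_app", {"name": "terminal"}, excluded=frozenset({"open_app"}))
except ValueError as err:
    print(err)
```

Rejecting anything outside the table before it reaches the executor keeps the model's output space small and makes runs comparable across harnesses.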

Enterprise Snapshot

- Anthropic: Computer Use API; Sonnet 4.5 at 61.4% OSWorld; docs emphasize pixel-accurate grounding, retries, and safety confirmations.

- Google DeepMind: Gemini 2.5 Computer Use API + model card with Online-Mind2Web 69.0%, WebVoyager 88.9%, AndroidWorld 69.7%, latency measurements, and safety mitigations.

- OpenAI: Operator research preview for U.S. Pro users, powered by a Computer-Using Agent; separate system card and developer surface via the Responses API; availability is limited/preview.

Where They’re Headed: Web → OS

- Few-/one-shot workflow cloning: near-term direction is robust task imitation from a single demonstration (screen capture + narration). Treat as an active research claim, not a fully solved product feature.

- Latency budgets for collaboration: to preserve direct manipulation, actions should land within 0.1–1 s HCI thresholds; current stacks often exceed this due to vision and planning overhead. Expect engineering on incremental vision (diff frames), cache-aware OCR, and action batching.

- OS-level breadth: file dialogs, multi-window focus, non-DOM UIs, and system policies add failure modes absent from browser-only agents. Gemini’s current “browser-optimized, not OS-optimized” status underscores this next step.

- Safety: the main risks are prompt injection from web content, dangerous actions, and data exfiltration. Model cards describe allow/deny lists, confirmations, and blocked domains; expect typed action contracts and “consent gates” for irreversible steps.
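The allow-list and consent-gate ideas in the safety bullet can be illustrated with a small policy check. Everything here is a hypothetical sketch: the domain list, the set of irreversible verbs, and the `confirm` callback are assumptions chosen for the example, not values taken from any model card.

```python
from typing import Callable
from urllib.parse import urlparse

# Hypothetical policy values, for illustration only.
ALLOWED_DOMAINS = {"example.com", "intranet.example.org"}
IRREVERSIBLE_VERBS = {"submit_payment", "delete_file", "send_email"}


def domain_allowed(url: str) -> bool:
    """True if the URL's host is an allowed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in ALLOWED_DOMAINS)


def gate(verb: str, url: str, confirm: Callable[[str, str], bool]) -> str:
    """Return "block", "deny", or "allow" for a proposed action."""
    if not domain_allowed(url):
        return "block"                  # outside the allow-list
    if verb in IRREVERSIBLE_VERBS and not confirm(verb, url):
        return "deny"                   # user declined an irreversible step
    return "allow"


def always_no(verb: str, url: str) -> bool:
    return False                        # stand-in for a real confirmation prompt


print(gate("click_at", "https://shop.example.com/cart", always_no))
print(gate("submit_payment", "https://shop.example.com/pay", always_no))
print(gate("click_at", "https://example.com.evil.test/", always_no))
```

Matching on the parsed hostname rather than on a substring of the URL matters here: a look-alike host such as `example.com.evil.test` contains the allowed name but is not a subdomain of it.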

Practical Build Notes

- Start with a browser-first agent using a documented action schema and a verified harness (e.g., Online-Mind2Web).

- Add recoverability: explicit post-conditions, on-screen verification, and rollback plans for long workflows.

- Treat metrics with skepticism: prefer audited leaderboards or third-party harnesses over self-reported scripts; OSWorld uses execution-based evaluation for reproducibility.
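The recoverability note above (explicit post-conditions plus rollback) can be sketched as follows. The `Step` structure and `run_workflow` helper are hypothetical names introduced for this example. Undoing the failed step along with the completed ones is one possible design choice, made here because a failed step may have partially executed.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    do: Callable[[], None]               # perform the UI action(s)
    postcondition: Callable[[], bool]    # check the resulting state
    undo: Callable[[], None]             # best-effort rollback


def run_workflow(steps: list[Step]) -> bool:
    """Run steps in order; on a failed post-condition, undo in reverse order."""
    done: list[Step] = []
    for step in steps:
        step.do()
        done.append(step)
        if not step.postcondition():
            for finished in reversed(done):
                finished.undo()
            return False
    return True


# Toy state (assumption): a list standing in for files created by the agent.
files: list[str] = []

create = Step(
    name="create report",
    do=lambda: files.append("report.txt"),
    postcondition=lambda: "report.txt" in files,
    undo=lambda: files.remove("report.txt"),
)
upload = Step(
    name="upload report",
    do=lambda: files.append("upload.tmp"),
    postcondition=lambda: False,         # simulate a failed verification
    undo=lambda: files.remove("upload.tmp"),
)

print(run_workflow([create, upload]), files)
```

Checking state after each step, rather than trusting the model's own report of success, mirrors the execution-based evaluation that OSWorld uses.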

Open Research & Tooling

Hugging Face’s Smol2Operator provides an open post-training recipe that upgrades a small VLM into a GUI-grounded operator—useful for labs/startups prioritizing reproducible training over leaderboard records.

Key Takeaways

- Computer-use (GUI) agents are VLM-driven systems that perceive screens and emit bounded UI actions (click/type/scroll) to operate unmodified apps; current public implementations include Anthropic Computer Use, Google Gemini 2.5 Computer Use, and OpenAI’s Computer-Using Agent.

- OSWorld (HKU) benchmarks 369 real desktop/web tasks with execution-based evaluation; at launch humans achieved 72.36% while the best model reached 12.24%, highlighting grounding and procedural gaps.

- Anthropic Claude Sonnet 4.5 reports 61.4% on OSWorld—sub-human but a large jump from prior Sonnet 4 results.

- Gemini 2.5 Computer Use leads several live-web benchmarks—Online-Mind2Web 69.0%, WebVoyager 88.9%, AndroidWorld 69.7%—and is explicitly optimized for browsers, not yet for OS-level control.

- OpenAI Operator is a research preview powered by the Computer-Using Agent (CUA) model that uses screenshots to interact with GUIs; availability remains limited.

- Open-source trajectory: Hugging Face’s Smol2Operator provides a reproducible post-training pipeline that turns a small VLM into a GUI-grounded operator, standardizing action schemas and datasets.

References:

Benchmarks (OSWorld & Online-Mind2Web)

- OSWorld homepage: https://os-world.github.io/

- OSWorld paper (arXiv): https://arxiv.org/abs/2404.07972

- OSWorld NeurIPS paper PDF: https://proceedings.neurips.cc/paper_files/paper/2024/file/5d413e48f84dc61244b6be550f1cd8f5-Paper-Datasets_and_Benchmarks_Track.pdf

- Online-Mind2Web (HAL leaderboard): https://hal.cs.princeton.edu/online_mind2web

- Online-Mind2Web (HF leaderboard): https://huggingface.co/spaces/osunlp/Online_Mind2Web_Leaderboard

- Online-Mind2Web (arXiv): https://arxiv.org/abs/2504.01382

- Online-Mind2Web GitHub: https://github.com/OSU-NLP-Group/Online-Mind2Web

Anthropic (Computer Use & Sonnet 4.5)

- Introducing Computer Use (Oct 2024): https://www.anthropic.com/news/3-5-models-and-computer-use

- Developing a Computer Use Model: https://www.anthropic.com/news/developing-computer-use

- Introducing Claude Sonnet 4.5 (benchmarks incl. OSWorld 61.4%): https://www.anthropic.com/news/claude-sonnet-4-5

- Claude Sonnet 4.5 System Card: https://www.anthropic.com/claude-sonnet-4-5-system-card

Google DeepMind (Gemini 2.5 Computer Use)

- Launch blog: https://blog.google/technology/google-deepmind/gemini-computer-use-model/

- Model card (PDF): https://storage.googleapis.com/deepmind-media/Model-Cards/Gemini-2-5-Computer-Use-Model-Card.pdf

- Evaluation & methodology addendum (PDF): https://storage.googleapis.com/deepmind-media/gemini/computer_use_eval_additional_info.pdf

- Gemini API docs — Computer Use: https://ai.google.dev/gemini-api/docs/computer-use

- Vertex AI docs — Computer Use: https://cloud.google.com/vertex-ai/generative-ai/docs/computer-use

OpenAI (Operator / CUA)

- Computer-Using Agent overview: https://openai.com/index/computer-using-agent/

- Operator system card: https://openai.com/index/operator-system-card/

- Introducing Operator (research preview): https://openai.com/index/introducing-operator/

Open-source: Hugging Face Smol2Operator

- Smol2Operator blog: https://huggingface.co/blog/smol2operator

- Smol2Operator repo: https://github.com/huggingface/smol2operator

- Smol2Operator demo Space: https://huggingface.co/spaces/A-Mahla/Smol2Operator

The post What are ‘Computer-Use Agents’? From Web to OS—A Technical Explainer appeared first on MarkTechPost.