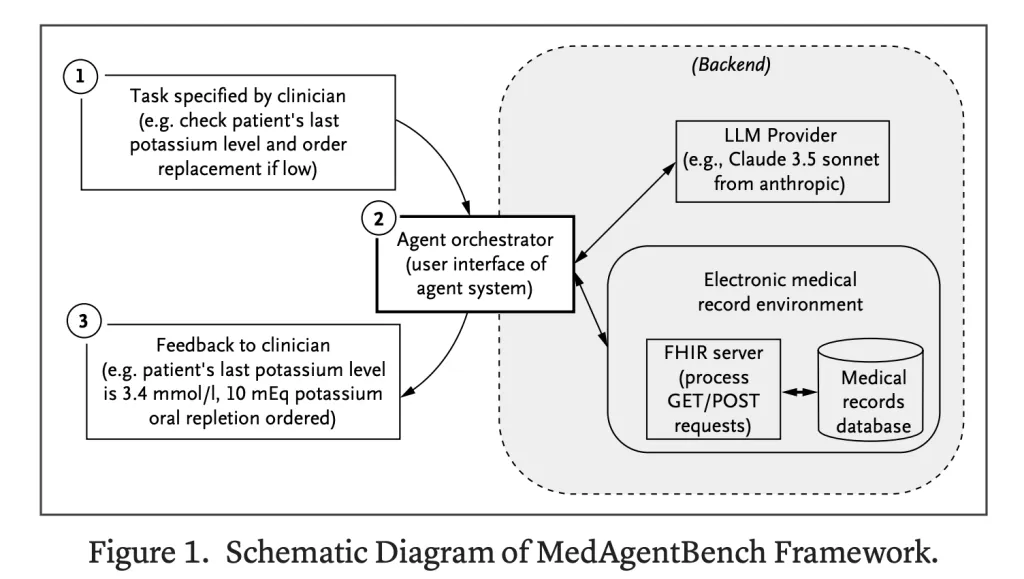

A team of Stanford University researchers has released MedAgentBench, a new benchmark suite designed to evaluate large language model (LLM) agents in healthcare contexts. Unlike prior question-answering datasets, MedAgentBench provides a virtual electronic health record (EHR) environment where AI systems must interact, plan, and execute multi-step clinical tasks. This marks a shift from testing static reasoning to assessing agentic capabilities in interactive, tool-based medical workflows.

Why Do We Need Agentic Benchmarks in Healthcare?

Recent LLMs have moved beyond static chat-based interactions toward agentic behavior—interpreting high-level instructions, calling APIs, integrating patient data, and automating complex processes. In medicine, this evolution could help address staff shortages, documentation burden, and administrative inefficiencies.

While general-purpose agent benchmarks (e.g., AgentBench, AgentBoard, tau-bench) exist, healthcare lacked a standardized benchmark that captures the complexity of medical data, FHIR interoperability, and longitudinal patient records. MedAgentBench fills this gap by offering a reproducible, clinically relevant evaluation framework.

What Does MedAgentBench Contain?

How Are the Tasks Structured?

MedAgentBench consists of 300 tasks across 10 categories, written by licensed physicians. These tasks include patient information retrieval, lab result tracking, documentation, test ordering, referrals, and medication management. Tasks average 2–3 steps and mirror workflows encountered in inpatient and outpatient care.

What Patient Data Supports the Benchmark?

The benchmark leverages 100 realistic patient profiles extracted from Stanford’s STARR data repository, comprising over 700,000 records including labs, vitals, diagnoses, procedures, and medication orders. Data was de-identified and jittered for privacy while preserving clinical validity.

How Is the Environment Built?

The environment is FHIR-compliant, supporting both retrieval (GET) and modification (POST) of EHR data. AI systems can simulate realistic clinical interactions such as documenting vitals or placing medication orders. This design makes the benchmark directly translatable to live EHR systems.
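
As a rough illustration of the two interaction types, the sketch below builds a FHIR search URL (GET) and a FHIR create payload (POST) for an Observation. The base URL, the patient identifier, and the helper names `build_get` and `build_post` are assumptions made for this example and are not taken from the benchmark; only the general FHIR REST conventions (resource type in the path, search parameters in the query string, JSON resource in the body) are standard. No network request is made.

```python
import json
from typing import Tuple
from urllib.parse import urlencode

# Hypothetical base URL; the benchmark's real endpoint is not given in this article.
FHIR_BASE = "http://localhost:8080/fhir"


def build_get(resource_type: str, **params: str) -> str:
    """Return the URL for a FHIR search (retrieval) request."""
    return f"{FHIR_BASE}/{resource_type}?{urlencode(params)}"


def build_post(resource_type: str, resource: dict) -> Tuple[str, str]:
    """Return (url, JSON body) for a FHIR create (modification) request."""
    body = dict(resource, resourceType=resource_type)
    return f"{FHIR_BASE}/{resource_type}", json.dumps(body)


# Retrieval: search one patient's heart-rate observations (LOINC 8867-4).
get_url = build_get("Observation", patient="example-patient-id", code="8867-4")

# Modification: document a new heart-rate vital sign for the same patient.
post_url, post_body = build_post(
    "Observation",
    {
        "status": "final",
        "code": {
            "coding": [
                {"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}
            ]
        },
        "subject": {"reference": "Patient/example-patient-id"},
        "effectiveDateTime": "2024-01-01T08:00:00Z",
        "valueQuantity": {
            "value": 72,
            "unit": "beats/minute",
            "system": "http://unitsofmeasure.org",
            "code": "/min",
        },
    },
)

print(get_url)
print(post_url)
print(post_body)
```

In a real run, the agent would emit requests like these as text and the environment would execute them against the FHIR server.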

How Are Models Evaluated?

- Metric: Task success rate (SR), measured with strict pass@1 to reflect real-world safety requirements.

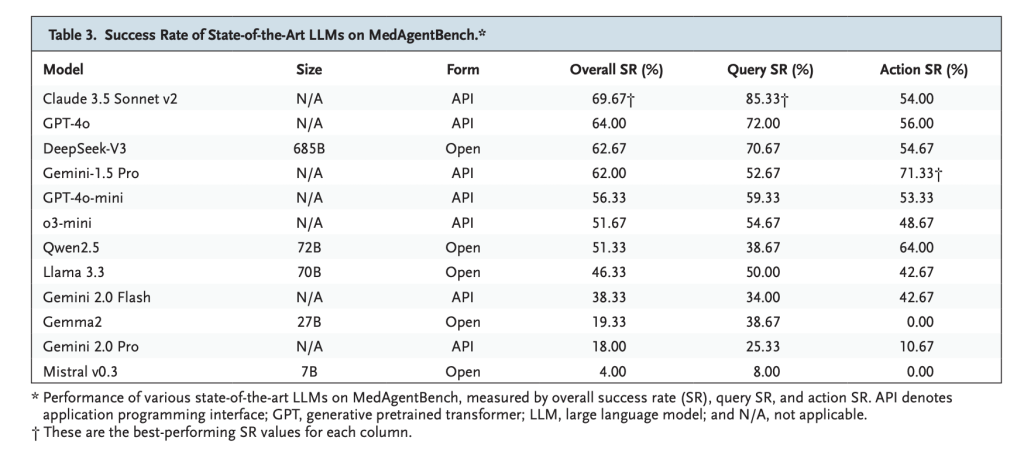

- Models Tested: 12 leading LLMs including GPT-4o, Claude 3.5 Sonnet, Gemini 2.0, DeepSeek-V3, Qwen2.5, and Llama 3.3.

- Agent Orchestrator: A baseline orchestration setup with nine FHIR functions, limited to eight interaction rounds per task.
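
The round limit in the orchestrator can be pictured with a minimal loop like the one below. This is a sketch under stated assumptions, not the benchmark's actual orchestrator: the action dictionary format, the `run_task` and `scripted_agent` names, and the single `get_heart_rate` tool are hypothetical stand-ins for an LLM and for the nine FHIR functions, which the article does not list.

```python
from typing import Callable, Dict, List, Optional

MAX_ROUNDS = 8  # per-task interaction limit stated in the article


def run_task(
    agent_step: Callable[[List[dict]], dict],
    tools: Dict[str, Callable[..., object]],
    instruction: str,
    max_rounds: int = MAX_ROUNDS,
) -> Optional[object]:
    """Drive a (hypothetical) agent until it finishes or runs out of rounds."""
    history: List[dict] = [{"role": "user", "content": instruction}]
    for _ in range(max_rounds):
        action = agent_step(history)
        if action["type"] == "finish":
            return action["answer"]
        tool = tools.get(action["name"])
        if tool is None:
            return None  # unknown function name: treat as a failed task
        result = tool(**action.get("args", {}))
        history.append({"role": "tool", "name": action["name"], "content": result})
    return None  # round limit reached without a final answer


def scripted_agent(history: List[dict]) -> dict:
    """Stand-in for an LLM: call one tool, then finish with its result."""
    if history[-1]["role"] == "tool":
        return {"type": "finish", "answer": history[-1]["content"]}
    return {"type": "call", "name": "get_heart_rate", "args": {"patient_id": "p1"}}


def looping_agent(history: List[dict]) -> dict:
    """Stand-in for an agent that never produces a final answer."""
    return {"type": "call", "name": "get_heart_rate", "args": {"patient_id": "p1"}}


tools = {"get_heart_rate": lambda patient_id: 72}  # toy tool with a fixed value

answer = run_task(scripted_agent, tools, "What is the latest heart rate for p1?")
timed_out = run_task(looping_agent, tools, "What is the latest heart rate for p1?")
print(answer, timed_out)
```

Under strict pass@1 scoring, a run that hits the round limit or calls an unknown function simply counts as a failure; there is no retry.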

Which Models Performed Best?

- Claude 3.5 Sonnet v2: Best overall with 69.67% success, especially strong in retrieval tasks (85.33%).

- GPT-4o: 64.0% success, showing balanced retrieval and action performance.

- DeepSeek-V3: 62.67% success, leading among open-weight models.

- Observation: Most models excelled at query tasks but struggled with action-based tasks requiring safe multi-step execution.
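
To make the percentages concrete, the sketch below computes a pass@1 success rate from one pass/fail outcome per task and back-calculates the task counts implied by the reported overall rates. It assumes the overall rate is an unweighted fraction of all 300 tasks; the counts are derived by arithmetic here and are not reported in this article.

```python
from typing import Sequence

TOTAL_TASKS = 300  # benchmark size stated in the article


def success_rate(outcomes: Sequence[bool]) -> float:
    """pass@1 success rate in percent: one attempt per task, no retries."""
    return 100.0 * sum(outcomes) / len(outcomes)


def implied_successes(rate_percent: float, total_tasks: int = TOTAL_TASKS) -> int:
    """Number of solved tasks implied by a reported rate (assumes equal weighting)."""
    return round(rate_percent / 100.0 * total_tasks)


reported = {
    "Claude 3.5 Sonnet v2": 69.67,
    "GPT-4o": 64.0,
    "DeepSeek-V3": 62.67,
}

for model, rate in reported.items():
    solved = implied_successes(rate)
    # Rebuild one outcome per task and confirm it reproduces the reported rate.
    outcomes = [True] * solved + [False] * (TOTAL_TASKS - solved)
    print(model, solved, round(success_rate(outcomes), 2))
```

Under that assumption, the gap between the top two models corresponds to 17 tasks out of 300.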

What Errors Did Models Make?

Two dominant failure patterns emerged:

- Instruction adherence failures — invalid API calls or incorrect JSON formatting.

- Output mismatch — providing full sentences when structured numerical values were required.

These errors highlight gaps in precision and reliability, both critical in clinical deployment.
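
Both failure patterns are the kind of thing a strict output check would catch. The sketch below is a hypothetical validator, not the benchmark's grader: it assumes the expected final answer is a JSON array of numbers, and it rejects malformed JSON as well as free-text sentences.

```python
import json
from typing import List, Optional, Union

Number = Union[int, float]


def parse_numeric_answer(raw: str) -> Optional[List[Number]]:
    """Return the numbers if `raw` is a JSON array of numbers, otherwise None."""
    try:
        value = json.loads(raw)
    except json.JSONDecodeError:
        return None  # malformed JSON or a plain sentence
    if not isinstance(value, list):
        return None  # valid JSON, but not the expected array shape
    for item in value:
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(item, bool) or not isinstance(item, (int, float)):
            return None
    return value


structured = parse_numeric_answer("[5.4]")
sentence = parse_numeric_answer("The potassium level is 5.4 mmol/L.")
broken_json = parse_numeric_answer("[5.4")
print(structured, sentence, broken_json)
```

The sentence contains the right value, but a strict grader of this kind would still mark it as a failure because the structure is wrong.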

Summary

MedAgentBench establishes the first large-scale benchmark for evaluating LLM agents in realistic EHR settings, pairing 300 clinician-authored tasks with a FHIR-compliant environment and 100 patient profiles. Results show strong potential but limited reliability: the best model, Claude 3.5 Sonnet v2, reaches only 69.67% success, and most models handle queries far better than safe action execution. While constrained by single-institution data and an EHR-focused scope, MedAgentBench provides an open, reproducible framework to drive the next generation of dependable healthcare AI agents.


The post Stanford Researchers Introduced MedAgentBench: A Real-World Benchmark for Healthcare AI Agents appeared first on MarkTechPost.