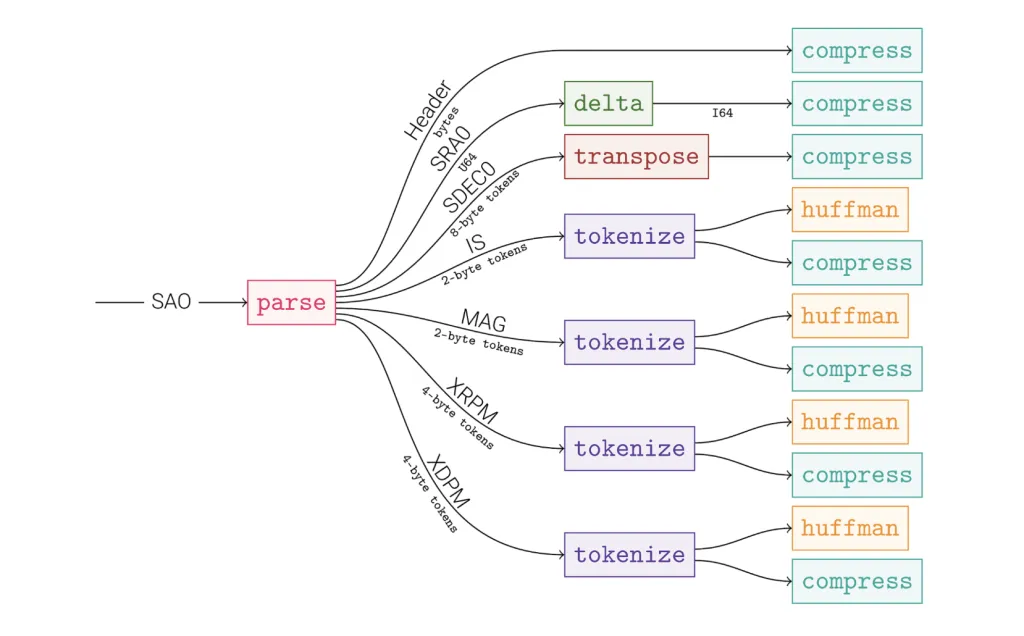

How much compression ratio and throughput could you recover by training a format-aware graph compressor and shipping its graph alongside the data, so that a universal decoder can read the result? Meta AI has released OpenZL, an open-source framework that builds specialized, format-aware compressors from high-level data descriptions and emits a self-describing wire format that a universal decoder can read, which decouples compressor evolution from reader rollouts. The approach is grounded in a graph model of compression that represents pipelines as directed acyclic graphs (DAGs) of modular codecs.

So, what’s new?

OpenZL formalizes compression as a computational graph: nodes are codecs/graphs, edges are typed message streams, and the finalized graph is serialized with the payload. Any frame produced by any OpenZL compressor can be decompressed by the universal decoder, because the graph specification travels with the data. This design aims to combine the ratio/throughput benefits of domain-specific codecs with the operational simplicity of a single, stable decoder binary.

How does it work?

- Describe data → build a graph. Developers supply a data description; OpenZL composes parse/group/transform/entropy stages into a DAG tailored to that structure. The result is a self-describing frame: compressed bytes plus the graph spec.

- Universal decode path. Decoding procedurally follows the embedded graph, removing the need to ship new readers when compressors evolve.
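The two steps above can be illustrated with a toy sketch. This is not OpenZL's API or wire format: the codec names, the JSON spec, and the length-prefixed frame layout are all assumptions made for illustration, and a linear pipeline stands in for a general DAG. The point is only that the decoder needs nothing beyond the frame itself and a fixed codec registry.

```python
import json
import struct
import zlib
from typing import Sequence


def delta_encode(data: bytes) -> bytes:
    # Each output byte is the difference from the previous input byte (mod 256).
    prev = 0
    out = bytearray()
    for b in data:
        out.append((b - prev) & 0xFF)
        prev = b
    return bytes(out)


def delta_decode(data: bytes) -> bytes:
    # Inverse of delta_encode: running sum mod 256.
    prev = 0
    out = bytearray()
    for d in data:
        prev = (prev + d) & 0xFF
        out.append(prev)
    return bytes(out)


# Hypothetical codec registry shared by every compressor and the one decoder.
CODECS = {
    "delta": (delta_encode, delta_decode),
    "zlib": (zlib.compress, zlib.decompress),
}


def compress(data: bytes, pipeline: Sequence[str]) -> bytes:
    payload = data
    for name in pipeline:
        payload = CODECS[name][0](payload)
    spec = json.dumps({"pipeline": list(pipeline)}).encode("utf-8")
    # Assumed frame layout: 4-byte big-endian spec length, spec, payload.
    return struct.pack(">I", len(spec)) + spec + payload


def universal_decompress(frame: bytes) -> bytes:
    (spec_len,) = struct.unpack(">I", frame[:4])
    spec = json.loads(frame[4:4 + spec_len].decode("utf-8"))
    payload = frame[4 + spec_len:]
    # Undo the stages in reverse order, as dictated by the embedded spec.
    for name in reversed(spec["pipeline"]):
        payload = CODECS[name][1](payload)
    return payload


data = bytes(range(256)) * 64
for pipeline in (["zlib"], ["delta", "zlib"]):
    frame = compress(data, pipeline)
    assert universal_decompress(frame) == data
```

Both frames are read by the same `universal_decompress`, even though they were produced by different pipelines. Changing the compressor means changing the `pipeline` argument, not the reader.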

Tooling and APIs

- SDDL (Simple Data Description Language): Built-in components and APIs let you decompose inputs into typed streams from a pre-compiled data description; available in C and Python surfaces under `openzl.ext.graphs.SDDL`.

- Language bindings: Core library and bindings are open-sourced; the repo documents C/C++ and Python usage, and the ecosystem is already adding community bindings (e.g., Rust `openzl-sys`).
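To make "decompose inputs into typed streams" concrete, here is a minimal sketch in plain Python. It does not use SDDL syntax or any OpenZL call; the record layout (a little-endian `uint32` id followed by a `float32` value) and the helper names are hypothetical. It shows the general idea of turning an array of fixed-size records into one homogeneous stream per field, which downstream codecs can then treat separately.

```python
import struct

# Hypothetical record layout: little-endian uint32 id, then float32 value.
RECORD = struct.Struct("<If")


def split_fields(blob: bytes):
    """Split packed records into one byte stream per field."""
    if len(blob) % RECORD.size != 0:
        raise ValueError("input is not a whole number of records")
    ids = bytearray()
    values = bytearray()
    for offset in range(0, len(blob), RECORD.size):
        rec = blob[offset:offset + RECORD.size]
        ids += rec[:4]      # uint32 field
        values += rec[4:]   # float32 field
    return bytes(ids), bytes(values)


def join_fields(ids: bytes, values: bytes) -> bytes:
    """Inverse of split_fields: interleave the streams back into records."""
    out = bytearray()
    for i in range(0, len(ids), 4):
        out += ids[i:i + 4] + values[i:i + 4]
    return bytes(out)


records = b"".join(RECORD.pack(i, i * 0.5) for i in range(1000))
ids, values = split_fields(records)
assert join_fields(ids, values) == records
```

After the split, the `ids` stream contains only consecutive integers, the kind of regularity a field-specific transform can exploit and that is harder to see when the fields are interleaved.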

How does it perform?

The research team reports that OpenZL achieves superior compression ratios and speeds versus state-of-the-art general-purpose codecs across a variety of real-world datasets. It also notes internal deployments at Meta with consistent size and/or speed improvements and shorter compressor development timelines. The public materials do not assign a single universal numeric factor; results are presented as Pareto improvements dependent on data and pipeline configuration.
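For readers unfamiliar with the term, a Pareto improvement here means a configuration that is at least as good on both ratio and speed and strictly better on at least one. The sketch below shows that comparison; the configuration names and numbers are made-up placeholders, not measurements of OpenZL or any other codec.

```python
from typing import List, NamedTuple


class Result(NamedTuple):
    name: str
    ratio: float  # compression ratio, higher is better
    speed: float  # throughput in MB/s, higher is better


def dominates(a: Result, b: Result) -> bool:
    # a is no worse than b on both axes and strictly better on at least one.
    return (a.ratio >= b.ratio and a.speed >= b.speed
            and (a.ratio > b.ratio or a.speed > b.speed))


def pareto_front(results: List[Result]) -> List[Result]:
    # Keep every result that no other result dominates.
    return [r for r in results if not any(dominates(o, r) for o in results)]


# Placeholder values for illustration only.
results = [
    Result("config_a", 2.0, 300.0),
    Result("config_b", 3.0, 200.0),
    Result("config_c", 1.8, 250.0),
]
front = pareto_front(results)
```

In this placeholder data, `config_c` is dominated by `config_a`, while `config_a` and `config_b` trade ratio against speed and both stay on the front. This is why no single numeric factor summarizes such results.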

Editorial Comments

OpenZL makes format-aware compression operationally practical: compressors are expressed as DAGs, the graph is embedded in each frame as a self-describing specification, and a universal decoder reads any frame, eliminating reader rollouts when compressors change. Meta reports Pareto gains over zstd and xz on real datasets.


The post Meta AI Open-Sources OpenZL: A Format-Aware Compression Framework with a Universal Decoder appeared first on MarkTechPost.