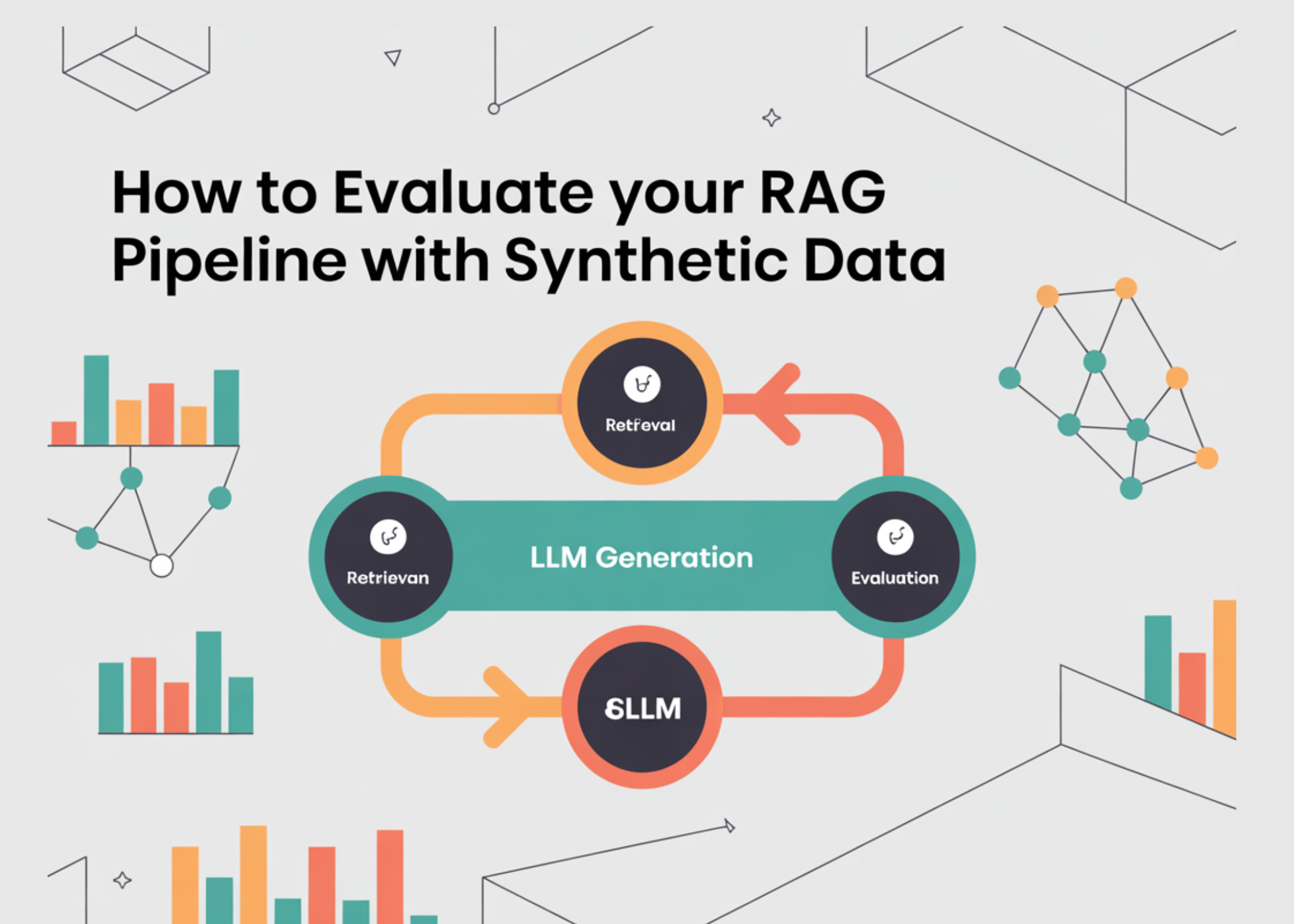

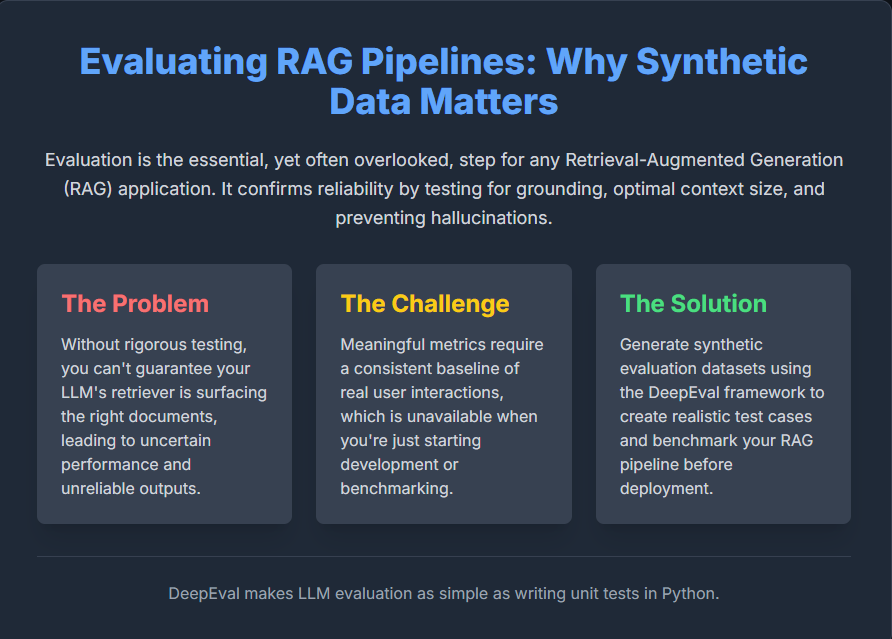

Evaluating LLM applications, particularly those using RAG (Retrieval-Augmented Generation), is crucial but often neglected. Without proper evaluation, it’s almost impossible to confirm if your system’s retriever is effective, if the LLM’s answers are grounded in the sources (or hallucinating), and if the context size is optimal.

Since initial testing lacks the necessary real user data for a baseline, a practical solution is synthetic evaluation datasets. This article will show you how to generate these realistic test cases using DeepEval, an open-source framework that simplifies LLM evaluation, allowing you to benchmark your RAG pipeline before it goes live. Check out the FULL CODES here.

Installing the dependencies

!pip install deepeval chromadb tiktoken pandasOpenAI API Key

Since DeepEval leverages external language models to perform its detailed evaluation metrics, an OpenAI API key is required for this tutorial to run.

- Navigate to the OpenAI API Key Management page and generate a new key.

- If you are new to the OpenAI platform, you may need to add billing details and make a small minimum payment (typically $5) to fully activate your API access.

Defining the text

In this step, we’re manually creating a text variable that will act as our source document for generating synthetic evaluation data.

This text combines diverse factual content across multiple domains — including biology, physics, history, space exploration, environmental science, medicine, computing, and ancient civilizations — to ensure the LLM has rich and varied material to work with.

DeepEval’s Synthesizer will later:

- Split this text into semantically coherent chunks,

- Select meaningful contexts suitable for generating questions, and

- Produce synthetic “golden” pairs — (input, expected_output) — that simulate real user queries and ideal LLM responses.

After defining the text variable, we save it as a .txt file so that DeepEval can read and process it later. You can use any other text document of your choice — such as a Wikipedia article, research summary, or technical blog post — as long as it contains informative and well-structured content. Check out the FULL CODES here.

text = """

Crows are among the smartest birds, capable of using tools and recognizing human faces even after years.

In contrast, the archerfish displays remarkable precision, shooting jets of water to knock insects off branches.

Meanwhile, in the world of physics, superconductors can carry electric current with zero resistance -- a phenomenon

discovered over a century ago but still unlocking new technologies like quantum computers today.

Moving to history, the Library of Alexandria was once the largest center of learning, but much of its collection was

lost in fires and wars, becoming a symbol of human curiosity and fragility. In space exploration, the Voyager 1 probe,

launched in 1977, has now left the solar system, carrying a golden record that captures sounds and images of Earth.

Closer to home, the Amazon rainforest produces roughly 20% of the world's oxygen, while coral reefs -- often called the

"rainforests of the sea" -- support nearly 25% of all marine life despite covering less than 1% of the ocean floor.

In medicine, MRI scanners use strong magnetic fields and radio waves

to generate detailed images of organs without harmful radiation.

In computing, Moore's Law observed that the number of transistors

on microchips doubles roughly every two years, though recent advances

in AI chips have shifted that trend.

The Mariana Trench is the deepest part of Earth's oceans,

reaching nearly 11,000 meters below sea level, deeper than Mount Everest is tall.

Ancient civilizations like the Sumerians and Egyptians invented

mathematical systems thousands of years before modern algebra emerged.

"""with open("example.txt", "w") as f:

f.write(text)Generating Synthetic Evaluation Data

In this code, we use the Synthesizer class from the DeepEval library to automatically generate synthetic evaluation data — also called goldens — from an existing document. The model “gpt-4.1-nano” is selected for its lightweight nature. We provide the path to our document (example.txt), which contains factual and descriptive content across diverse topics like physics, ecology, and computing. The synthesizer processes this text to create meaningful question–answer pairs (goldens) that can later be used to test and benchmark LLM performance on comprehension or retrieval tasks.

The script successfully generates up to six synthetic goldens. The generated examples are quite rich — for instance, one input asks to “Evaluate the cognitive abilities of corvids in facial recognition tasks,” while another explores “Amazon’s oxygen contribution and its role in ecosystems.” Each output includes a coherent expected answer and contextual snippets derived directly from the document, demonstrating how DeepEval can automatically produce high-quality synthetic datasets for LLM evaluation. Check out the FULL CODES here.

from deepeval.synthesizer import Synthesizer

synthesizer = Synthesizer(model="gpt-4.1-nano")

# Generate synthetic goldens from your document

synthesizer.generate_goldens_from_docs(

document_paths=["example.txt"],

include_expected_output=True

)

# Print generated results

for golden in synthesizer.synthetic_goldens[:3]:

print(golden, "n")Using EvolutionConfig to Control Input Complexity

In this step, we configure the EvolutionConfig to influence how the DeepEval synthesizer generates more complex and diverse inputs. By assigning weights to different evolution types — such as REASONING, MULTICONTEXT, COMPARATIVE, HYPOTHETICAL, and IN_BREADTH — we guide the model to create questions that vary in reasoning style, context usage, and depth.

The num_evolutions parameter specifies how many evolution strategies will be applied to each text chunk, allowing multiple perspectives to be synthesized from the same source material. This approach helps generate richer evaluation datasets that test an LLM’s ability to handle nuanced and multi-faceted queries.

The output demonstrates how this configuration affects the generated goldens. For instance, one input asks about crows’ tool use and facial recognition, prompting the LLM to produce a detailed answer covering problem-solving and adaptive behavior. Another input compares Voyager 1’s golden record with the Library of Alexandria, requiring reasoning across multiple contexts and historical significance.

Each golden includes the original context, applied evolution types (e.g., Hypothetical, In-Breadth, Reasoning), and a synthetic quality score. Even with a single document, this evolution-based approach creates diverse, high-quality synthetic evaluation examples for testing LLM performance. Check out the FULL CODES here.

from deepeval.synthesizer.config import EvolutionConfig, Evolution

evolution_config = EvolutionConfig(

evolutions={

Evolution.REASONING: 1/5,

Evolution.MULTICONTEXT: 1/5,

Evolution.COMPARATIVE: 1/5,

Evolution.HYPOTHETICAL: 1/5,

Evolution.IN_BREADTH: 1/5,

},

num_evolutions=3

)

synthesizer = Synthesizer(evolution_config=evolution_config)

synthesizer.generate_goldens_from_docs(["example.txt"])This ability to generate high-quality, complex synthetic data is how we bypass the initial hurdle of lacking real user interactions. By leveraging DeepEval’s Synthesizer—especially when guided by the EvolutionConfig—we move far beyond simple question-and-answer pairs.

The framework allows us to create rigorous test cases that probe the RAG system’s limits, covering everything from multi-context comparisons and hypothetical scenarios to complex reasoning.

This rich, custom-built dataset provides a consistent and diverse baseline for benchmarking, allowing you to continuously iterate on your retrieval and generation components, build confidence in your RAG pipeline’s grounding capabilities, and ensure it delivers reliable performance long before it ever handles its first live query. Check out the FULL CODES here.

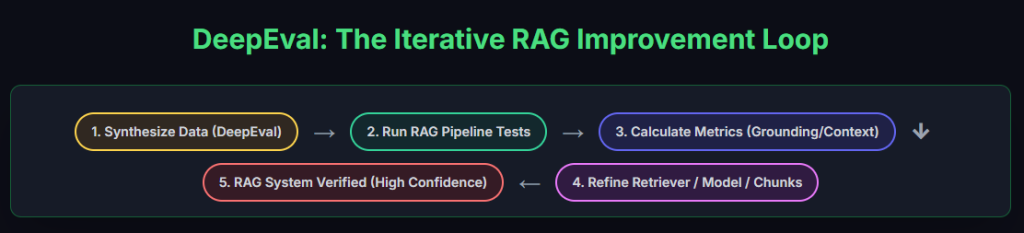

The above Iterative RAG Improvement Loop uses DeepEval’s synthetic data to establish a continuous, rigorous testing cycle for your pipeline. By calculating essential metrics like Grounding and Context, you gain the necessary feedback to iteratively refine your retriever and model components. This systematic process ensures you achieve a verified, high-confidence RAG system that maintains reliability before deployment.

Check out the FULL CODES here. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.

The post How to Evaluate Your RAG Pipeline with Synthetic Data? appeared first on MarkTechPost.