ByteDance Seed recently dropped a research that might change how we build reasoning AI. For years, devs and AI researchers have struggled to ‘cold-start’ Large Language Models (LLMs) into Long Chain-of-Thought (Long CoT) models. Most models lose their way or fail to transfer patterns during multi-step reasoning.

The ByteDance team discovered the problem: we have been looking at reasoning the wrong way. Instead of just words or nodes, effective AI reasoning has a stable, molecular-like structure.

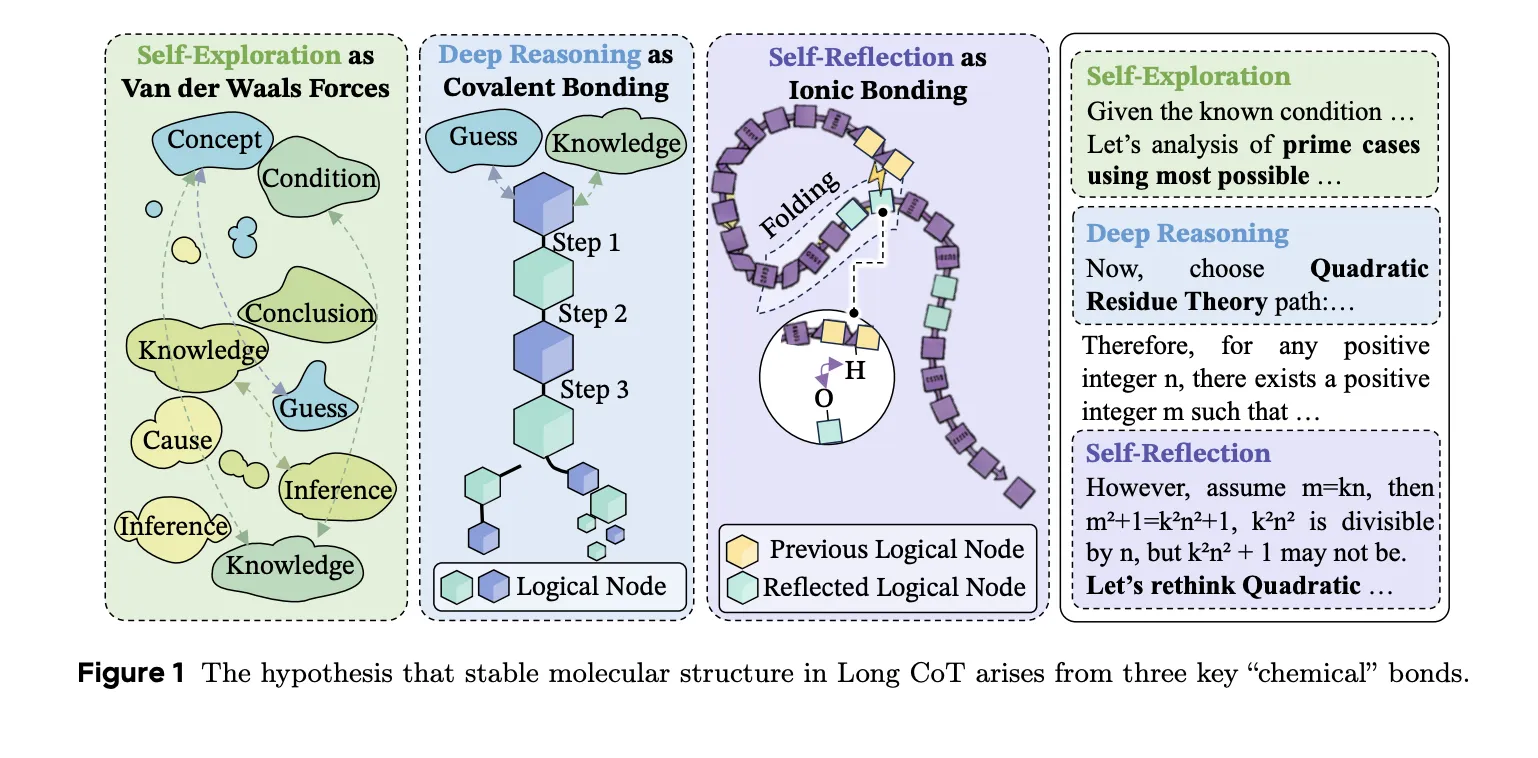

The 3 ‘Chemical Bonds’ of Thought

The researchers posit that high-quality reasoning trajectories are held together by 3 interaction types. These mirror the forces found in organic chemistry:

- Deep Reasoning as Covalent Bonds: This forms the primary ‘bone’ of the thought process. It encodes strong logical dependencies where Step A must justify Step B. Breaking this bond destabilizes the entire answer.

- Self-Reflection as Hydrogen Bonds: This acts as a stabilizer. Just as proteins gain stability when chains fold, reasoning stabilizes when later steps (like Step 100) revise or reinforce earlier premises (like Step 10). In their tests, 81.72% of reflection steps successfully reconnected to previously formed clusters.

- Self-Exploration as Van der Waals Forces: These are weak bridges between distant clusters of logic. They allow the model to probe new possibilities or alternative hypotheses before enforcing stronger logical constraints.

Why ‘Wait, Let Me Think’ Isn’t Enough

Most AI devs/researchers try to fix reasoning by training models to imitate keywords like ‘wait’ or ‘maybe’. ByteDance team proved that models actually learn the underlying reasoning behavior, not the surface words.

The research team identifies a phenomenon called Semantic Isomers. These are reasoning chains that solve the same task and use the same concepts but differ in how their logical ‘bonds’ are distributed.

Key findings include:

- Imitation Fails: Fine-tuning on human-annotated traces or using In-Context Learning (ICL) from weak models fails to build stable Long CoT structures.

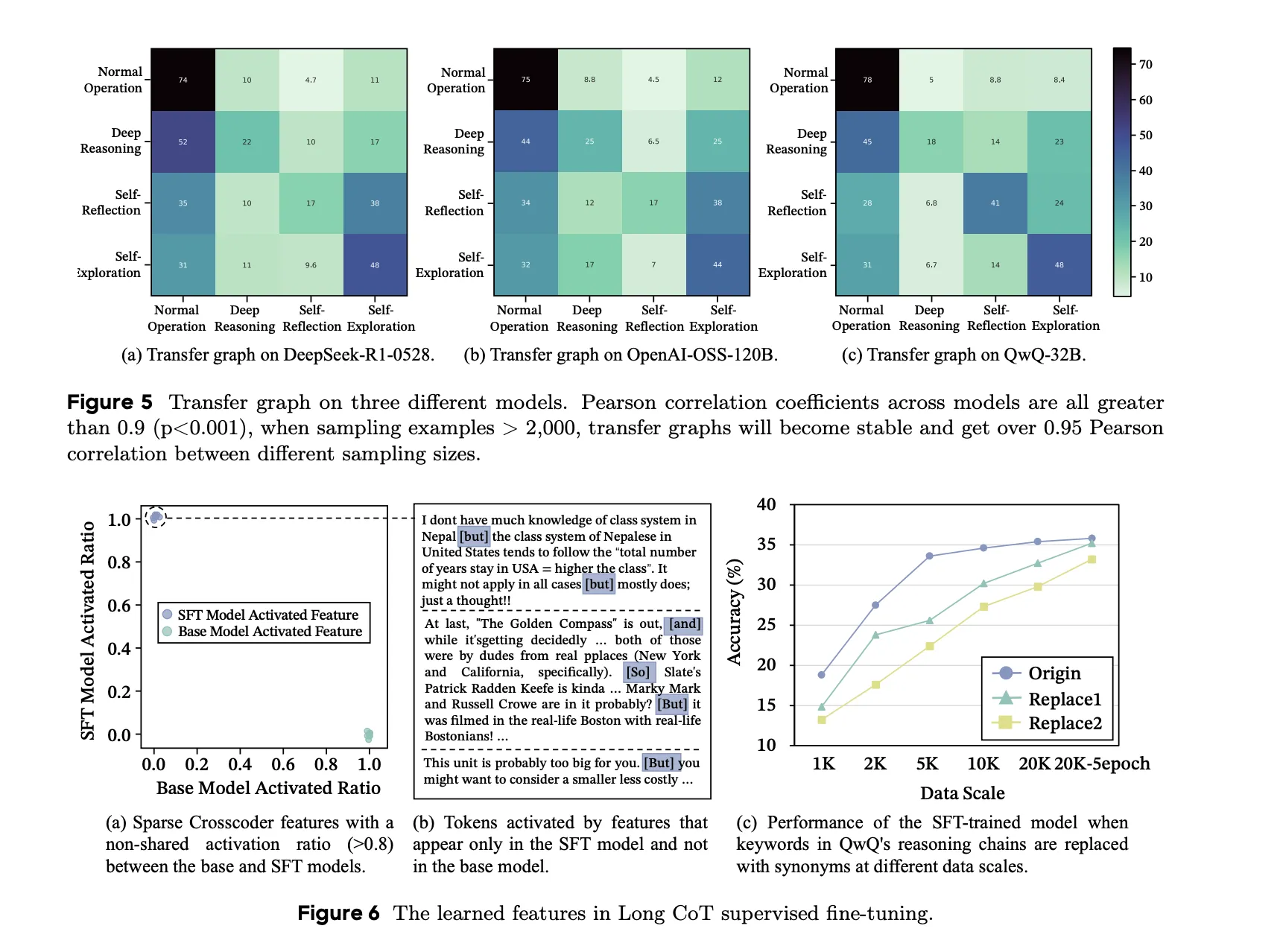

- Structural Conflict: Mixing reasoning data from different strong teachers (like DeepSeek-R1 and OpenAI-OSS) actually destabilizes the model. Even if the data is similar, the different “molecular” structures cause structural chaos and drop performance.

- Information Flow: Unlike humans, who have uniform information gain, strong reasoning models exhibit metacognitive oscillation. They alternate between high-entropy exploration and stable convergent validation.

MOLE-SYN: The Synthesis Method

To fix these issues, ByteDance team introduced MOLE-SYN. This is a ‘distribution-transfer-graph’ method. Instead of directly copying a teacher’s text, it transfers the behavioral structure to the student model.

It works by estimating a behavior transition graph from strong models and guiding a cheaper model to synthesize its own effective Long CoT structures. This decoupling of structure from surface text yields consistent gains across 6 major benchmarks, including GSM8K, MATH-500, and OlymBench.

Protecting the ‘Thought Molecule‘

This research also sheds light on how private AI companies protect their models. Exposing full reasoning traces allows others to clone the model’s internal procedures.

ByteDance team found that summarization and reasoning compression are effective defenses. By reducing the token count—often by more than 45%—companies disrupt the reasoning bond distributions. This creates a gap between what the model outputs and its internal ‘error-bounded transitions,’ making it much harder to distill the model’s capabilities.

Key Takeaways

- Reasoning as ‘Molecular’ Bonds: Effective Long Chain-of-Thought (Long CoT) is defined by three specific ‘chemical’ bonds: Deep Reasoning (covalent-like) forms the logical backbone, Self-Reflection (hydrogen-bond-like) provides global stability through logical folding, and Self-Exploration (van der Waals-like) bridges distant semantic concepts.

- Behavior Over Keywords: Models internalize underlying reasoning structures and transition distributions rather than just surface-level lexical cues like ‘wait’ or ‘maybe’. Replacing keywords with synonyms does not significantly impact performance, proving that true reasoning depth comes from learned behavioral motifs.

- The ‘Semantic Isomer’ Conflict: Combining heterogeneous reasoning data from different strong models (e.g., DeepSeek-R1 and OpenAI-OSS) can trigger ‘structural chaos’. Even if data sources are statistically similar, incompatible behavioral distributions can break logical coherence and degrade model performance.

- MOLE-SYN Methodology: This ‘distribution-transfer-graph’ framework enables models to synthesize effective Long CoT structures from scratch using cheaper instruction LLMs. By transferring the behavioral transition graph instead of direct text, MOLE-SYN achieves performance close to expensive distillation while stabilizing Reinforcement Learning (RL).

- Protection via Structural Disruption: Private LLMs can protect their internal reasoning processes through summarization and compression. Reducing token count by roughly 45% or more effectively ‘breaks’ the bond distributions, making it significantly harder for unauthorized models to clone internal reasoning procedures via distillation.

Check out the Paper. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.

The post Forget Keyword Imitation: ByteDance AI Maps Molecular Bonds in AI Reasoning to Stabilize Long Chain-of-Thought Performance and Reinforcement Learning (RL) Training appeared first on MarkTechPost.