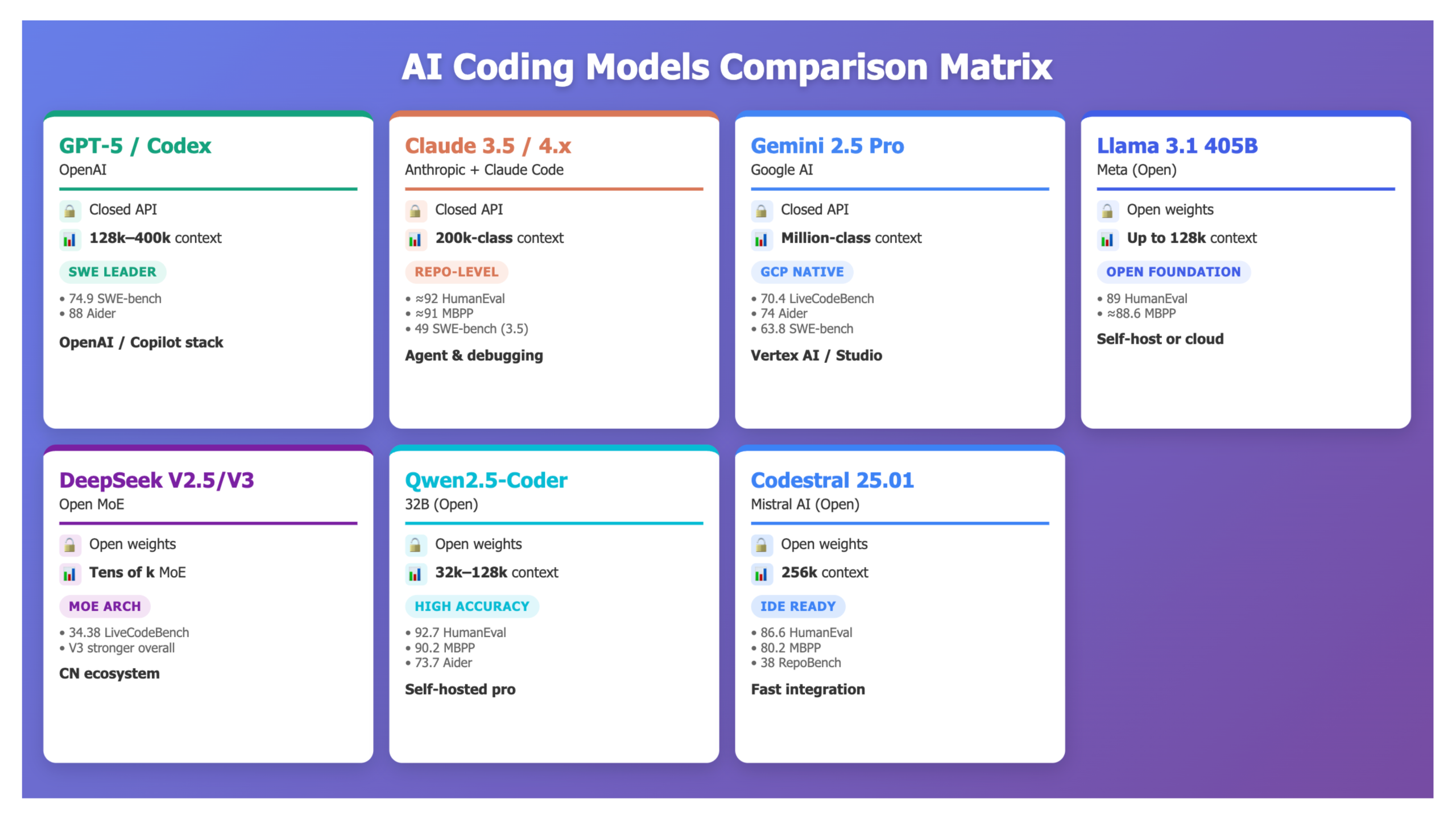

Code-oriented large language models moved from autocomplete to software engineering systems. In 2025, leading models must fix real GitHub issues, refactor multi-repo backends, write tests, and run as agents over long context windows. The main question for teams is not “can it code” but which model fits which constraints.

Here are seven models (and systems around them) that cover most real coding workloads today:

- OpenAI GPT-5 / GPT-5-Codex

- Anthropic Claude 3.5 Sonnet / Claude 4.x Sonnet with Claude Code

- Google Gemini 2.5 Pro

- Meta Llama 3.1 405B Instruct

- DeepSeek-V2.5-1210 (with DeepSeek-V3 as the successor)

- Alibaba Qwen2.5-Coder-32B-Instruct

- Mistral Codestral 25.01

The goal of this comparison is not to rank them on a single score. The goal is to show which system to pick for a given benchmark target, deployment model, governance requirement, and IDE or agent stack.

Evaluation dimensions

We compare on six stable dimensions:

- Core coding quality: HumanEval, MBPP / MBPP EvalPlus, code generation and repair quality on standard Python tasks.

- Repo and bug-fix performance: SWE-bench Verified (real GitHub issues), Aider Polyglot (whole-file edits), RepoBench, LiveCodeBench.

- Context and long-context behavior: Documented context limits and practical behavior in long sessions.

- Deployment model: Closed API, cloud service, containers, on-premises or fully self-hosted open weights.

- Tooling and ecosystem: Native agents, IDE extensions, cloud integration, GitHub and CI/CD support.

- Cost and scaling pattern: Token pricing for closed models, hardware footprint and inference pattern for open models.

1. OpenAI GPT-5 / GPT-5-Codex

OpenAI’s GPT-5 is the flagship reasoning and coding model and the default in ChatGPT. For real-world code, OpenAI reports:

- SWE-bench Verified: 74.9%

- Aider Polyglot: 88%

Both benchmarks simulate real engineering: SWE-bench Verified runs against upstream repos and tests; Aider Polyglot measures whole-file multi-language edits.

Context and variants

- gpt-5 (chat) API: 128k token context.

- gpt-5-pro / gpt-5-codex: up to 400k combined context in the model card, with typical production limits around ≈272k input + 128k output for reliability.

GPT-5 and GPT-5-Codex are available in ChatGPT (Plus / Pro / Team / Enterprise) and via the OpenAI API; they are closed-weight, cloud-hosted only.

Strengths

- Highest published SWE-bench Verified and Aider Polyglot scores among widely available models.

- Very strong at multi-step bug fixing with “thinking” (chain-of-thought) enabled.

- Deep ecosystem: ChatGPT, Copilot, and many third-party IDE and agent platforms use GPT-5 backends.

Limits

- No self-hosting; all traffic must go through OpenAI or partners.

- Long-context calls are expensive if you stream full monorepos, so you need retrieval and diff-only patterns.

Use when you want maximum repo-level benchmark performance and are comfortable with a closed, cloud API.

2. Anthropic Claude 3.5 Sonnet / Claude 4.x + Claude Code

Claude 3.5 Sonnet was Anthropic’s main coding workhorse before the Claude 4 line. Anthropic highlights it as SOTA on HumanEval, and independent comparisons report:

- HumanEval: ≈ 92%

- MBPP EvalPlus: ≈ 91%

In 2025, Anthropic released Claude 4 Opus, Sonnet, and Sonnet 4.5, positioning Sonnet 4.5 as its best coding and agent model so far.

Claude Code stack

Claude Code is a repo-aware coding system:

- Managed VM connected to your GitHub repo.

- File browsing, editing, tests, and PR creation.

- SDK for building custom agents that use Claude as a coding backend.

Strengths

- Very strong HumanEval / MBPP, good empirical behavior on debugging and code review.

- Production-grade coding agent environment with persistent VM and GitHub workflows.

Limits

- Closed and cloud-hosted, similar to GPT-5 in governance terms.

- Published SWE-bench Verified numbers for Claude 3.5 Sonnet are below GPT-5, though Claude 4.x is likely closer.

Use when you need explainable debugging, code review, and a managed repo-level agent and can accept a closed deployment.

3. Google Gemini 2.5 Pro

Gemini 2.5 Pro is Google DeepMind’s main coding and reasoning model for developers. It reports following performance/results:

- LiveCodeBench v5: 70.4%

- Aider Polyglot (whole-file editing): 74.0%

- SWE-bench Verified: 63.8%

These results place Gemini 2.5 Pro above many earlier models and only behind Claude 3.7 and GPT-5 on SWE-bench Verified.

Context and platform

- Long-context capability marketed up to 1M tokens across the Gemini family; 2.5 Pro is the stable tier used in Gemini Apps, Google AI Studio, and Vertex AI.

- Tight integration with GCP services, BigQuery, Cloud Run, and Google Workspace.

Strengths

- Good combination of LiveCodeBench, Aider, SWE-bench scores plus first-class GCP integration.

- Strong choice for “data plus application code” when you want the same model for SQL, analytics helpers, and backend code.

Limits

- Closed and tied to Google Cloud.

- For pure SWE-bench Verified, GPT-5 and the newest Claude Sonnet 4.x are stronger.

Use when your workloads already run on GCP / Vertex AI and you want a long-context coding model inside that stack.

4. Meta Llama 3.1 405B Instruct

Meta’s Llama 3.1 family (8B, 70B, 405B) is open-weight. The 405B Instruct variant is the high-end option for coding and general reasoning. It reports following performance/results:

- HumanEval (Python): 89.0

- MBPP (base or EvalPlus): ≈ 88.6

These scores put Llama 3.1 405B among the strongest open models on classic code benchmarks.

The official model card states that Llama 3.1 models outperform many open and closed chat models on common benchmarks and are optimized for multilingual dialogue and reasoning.

Strengths

- High HumanEval / MBPP scores with open weights and permissive licensing.

- Strong general performance (MMLU, MMLU-Pro, etc.), so one model can serve both product features and coding agents.

Limits

- 405B parameters mean high serving cost and latency unless you have a large GPU cluster.

- For strictly code benchmarks at a fixed compute budget, specialized models such as Qwen2.5-Coder-32B and Codestral 25.01 are more cost-efficient.

Use when you want a single open foundation model with strong coding and general reasoning, and you control your own GPU infrastructure.

5. DeepSeek-V2.5-1210 (and DeepSeek-V3)

DeepSeek-V2.5-1210 is an upgraded Mixture-of-Experts model that merges the chat and coder lines. The model card reports:

- LiveCodeBench (08.01–12.01): improved from 29.2% to 34.38%

- MATH-500: 74.8% → 82.8%

DeepSeek has since released DeepSeek-V3, a 671B-parameter MoE with 37B active per token, trained on 14.8T tokens. The performance is comparable to leading closed models on many reasoning and coding benchmarks, and public dashboards show V3 ahead of V2.5 on key tasks.

Strengths

- Open MoE model with solid LiveCodeBench results and good math performance for its size.

- Efficient active-parameter count vs total parameters.

Limits

- V2.5 is no longer the flagship; DeepSeek-V3 is now the reference model.

- Ecosystem is lighter than OpenAI / Google / Anthropic; teams must assemble their own IDE and agent integrations.

Use when you want a self-hosted MoE coder with open weights and are ready to move to DeepSeek-V3 as it matures.

6. Qwen2.5-Coder-32B-Instruct

Qwen2.5-Coder is Alibaba’s code-specific LLM family. The technical report and model card describe six sizes (0.5B to 32B) and continued pretraining on over 5.5T tokens of code-heavy data.

The official benchmarks for Qwen2.5-Coder-32B-Instruct list:

- HumanEval: 92.7%

- MBPP: 90.2%

- LiveCodeBench: 31.4%

- Aider Polyglot: 73.7%

- Spider: 85.1%

- CodeArena: 68.9%

Strengths

- Very strong HumanEval / MBPP / Spider results for an open model; often competitive with closed models in pure code tasks.

- Multiple parameter sizes make it adaptable to different hardware budgets.

Limits

- Less suited for broad general reasoning than a generalist like Llama 3.1 405B or DeepSeek-V3.

- Documentation and ecosystem are catching up in English-language tooling.

Use when you need a self-hosted, high-accuracy code model and can pair it with a general LLM for non-code tasks.

7. Mistral Codestral 25.01

Codestral 25.01 is Mistral’s updated code generation model. Mistral’s announcement and follow-up posts state that 25.01 uses a more efficient architecture and tokenizer and generates code roughly 2× faster than the base Codestral model.

Benchmark reports:

- HumanEval: 86.6%

- MBPP: 80.2%

- Spider: 66.5%

- RepoBench: 38.0%

- LiveCodeBench: 37.9%

Codestral 25.01 supports over 80 programming languages and a 256k token context window, and is optimized for low-latency, high-frequency tasks such as completion and FIM.

Strengths

- Very good RepoBench / LiveCodeBench scores for a mid-size open model.

- Designed for fast interactive use in IDEs and SaaS, with open weights and a 256k context.

Limits

- Absolute HumanEval / MBPP scores sit below Qwen2.5-Coder-32B, which is expected at this parameter class.

Use when you need a compact, fast open code model for completions and FIM at scale.

Head to head comparison

| Feature | GPT-5 / GPT-5-Codex | Claude 3.5 / 4.x + Claude Code | Gemini 2.5 Pro | Llama 3.1 405B Instruct | DeepSeek-V2.5-1210 / V3 | Qwen2.5-Coder-32B | Codestral 25.01 |

|---|---|---|---|---|---|---|---|

| Core task | Hosted general model with strong coding and agents | Hosted models plus repo-level coding VM | Hosted coding and reasoning model on GCP | Open generalist foundation with strong coding | Open MoE coder and chat model | Open code-specialized model | Open mid-size code model |

| Context | 128k (chat), up to 400k Pro / Codex | 200k-class (varies by tier) | Long-context, million-class across Gemini line | Up to 128k in many deployments | Tens of k, MoE scaling | 32B with typical 32k–128k contexts depending on host | 256k context |

| Code benchmarks (examples) | 74.9 SWE-bench, 88 Aider | ≈92 HumanEval, ≈91 MBPP, 49 SWE-bench (3.5); 4.x stronger but less published | 70.4 LiveCodeBench, 74 Aider, 63.8 SWE-bench | 89 HumanEval, ≈88.6 MBPP | 34.38 LiveCodeBench; V3 stronger on mixed benchmarks | 92.7 HumanEval, 90.2 MBPP, 31.4 LiveCodeBench, 73.7 Aider | 86.6 HumanEval, 80.2 MBPP, 38 RepoBench, 37.9 LiveCodeBench |

| Deployment | Closed API, OpenAI / Copilot stack | Closed API, Anthropic console, Claude Code | Closed API, Google AI Studio / Vertex AI | Open weights, self-hosted or cloud | Open weights, self-hosted; V3 via providers | Open weights, self-hosted or via providers | Open weights, available on multiple clouds |

| Integration path | ChatGPT, OpenAI API, Copilot | Claude app, Claude Code, SDKs | Gemini Apps, Vertex AI, GCP | Hugging Face, vLLM, cloud marketplaces | Hugging Face, vLLM, custom stacks | Hugging Face, commercial APIs, local runners | Azure, GCP, custom inference, IDE plugins |

| Best fit | Max SWE-bench / Aider performance in hosted setting | Repo-level agents and debugging quality | GCP-centric engineering and data + code | Single open foundation model | Open MoE experiments and Chinese ecosystem | Self-hosted high-accuracy code assistant | Fast open model for IDE and product integration |

What to use when?

- You want the strongest hosted repo-level solver: Use GPT-5 / GPT-5-Codex. Claude Sonnet 4.x is the closest competitor, but GPT-5 has the clearest SWE-bench Verified and Aider numbers today.

- You want a full coding agent over a VM and GitHub: Use Claude Sonnet + Claude Code for repo-aware workflows and long multi-step debugging sessions.

- You are standardized on Google Cloud: Use Gemini 2.5 Pro as the default coding model inside Vertex AI and AI Studio.

- You need a single open general foundation: Use Llama 3.1 405B Instruct when you want one open model for application logic, RAG, and code.

- You want the strongest open code specialist: Use Qwen2.5-Coder-32B-Instruct, and add a smaller general LLM for non-code tasks if needed.

- You want MoE-based open models: Use DeepSeek-V2.5-1210 now and plan for DeepSeek-V3 as you move to the latest upgrade.

- You are building IDEs or SaaS products and need a fast open code model: Use Codestral 25.01 for FIM, completion, and mid-size repo work with 256k context.

Editorial comments

GPT-5, Claude Sonnet 4.x, and Gemini 2.5 Pro now define the upper bound of hosted coding performance, especially on SWE-bench Verified and Aider Polyglot. At the same time, open models such as Llama 3.1 405B, Qwen2.5-Coder-32B, DeepSeek-V2.5/V3, and Codestral 25.01 show that it is realistic to run high-quality coding systems on your own infrastructure, with full control over weights and data paths.

For most software engineering teams, the practical answer is a portfolio: one or two hosted frontier models for the hardest multi-service refactors, plus one or two open models for internal tools, regulated code bases, and latency-sensitive IDE integrations.

References

- OpenAI – Introducing GPT-5 for developers (SWE-bench Verified, Aider Polyglot) (openai.com)

- Vellum, Runbear and other benchmark summaries for GPT-5 coding performance (vellum.ai)

- Anthropic – Claude 3.5 Sonnet and Claude 4 announcements (Anthropic)

- Kitemetric and other third-party Claude 3.5 Sonnet coding benchmark reviews (Kite Metric)

- Google – Gemini 2.5 Pro model page and Google / Datacamp benchmark posts (Google DeepMind)

- Meta – Llama 3.1 405B model card and analyses of HumanEval / MBPP scores (Hugging Face)

- DeepSeek – DeepSeek-V2.5-1210 model card and update notes; community coverage on V3 (Hugging Face)

- Alibaba – Qwen2.5-Coder technical report and Hugging Face model card (arXiv)

- Mistral – Codestral 25.01 announcement and benchmark summaries (Mistral AI)

The post Comparing the Top 7 Large Language Models LLMs/Systems for Coding in 2025 appeared first on MarkTechPost.