Large language models are now limited less by training and more by how fast and cheaply we can serve tokens under real traffic. That comes down to three implementation details: how the runtime batches requests, how it overlaps prefill and decode, and how it stores and reuses the KV cache. Different engines make different tradeoffs on these axes, which show up directly as differences in tokens per second, P50/P99 latency, and GPU memory usage.

This article compares six runtimes that show up repeatedly in production stacks:

- vLLM

- TensorRT LLM

- Hugging Face Text Generation Inference (TGI v3)

- LMDeploy

- SGLang

- DeepSpeed Inference / ZeRO Inference

1. vLLM

Design

vLLM is built around PagedAttention. Instead of storing each sequence’s KV cache in a large contiguous buffer, it partitions KV into fixed size blocks and uses an indirection layer so each sequence points to a list of blocks.

This gives:

- Very low KV fragmentation (reported <4% waste vs 60–80% in naïve allocators)

- High GPU utilization with continuous batching

- Native support for prefix sharing and KV reuse at block level

Recent versions add KV quantization (FP8) and integrate FlashAttention style kernels.
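
The block indirection described above can be illustrated with a small sketch. This is not vLLM's implementation: `BlockAllocator`, `Sequence`, and the `BLOCK_SIZE` value are hypothetical names and numbers chosen for illustration. It only shows why waste stays confined to the last, partially filled block of each sequence.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV block (assumed value, for illustration only)


@dataclass
class BlockAllocator:
    """Hands out fixed-size physical block ids from a free list."""
    num_blocks: int
    free: list = field(init=False)

    def __post_init__(self) -> None:
        self.free = list(range(self.num_blocks))

    def allocate(self) -> int:
        if not self.free:
            raise MemoryError("no free KV blocks")
        return self.free.pop()

    def release(self, block_id: int) -> None:
        self.free.append(block_id)


@dataclass
class Sequence:
    """Tracks a token count and the logical-to-physical block table."""
    num_tokens: int = 0
    block_table: list = field(default_factory=list)

    def append_token(self, allocator: BlockAllocator) -> None:
        # A new physical block is needed only when the last one is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(allocator.allocate())
        self.num_tokens += 1

    def wasted_slots(self) -> int:
        # Unused slots exist only in the last, partially filled block.
        return len(self.block_table) * BLOCK_SIZE - self.num_tokens

    def free_all(self, allocator: BlockAllocator) -> None:
        # Blocks return to the pool individually; no compaction is needed.
        for block_id in self.block_table:
            allocator.release(block_id)
        self.block_table.clear()
        self.num_tokens = 0


allocator = BlockAllocator(num_blocks=8)
seq = Sequence()
for _ in range(35):
    seq.append_token(allocator)
print(len(seq.block_table), seq.wasted_slots())  # 3 blocks, 13 unused slots
```

Because blocks need not be contiguous, a finished sequence returns its blocks to the free list and any other sequence can pick them up immediately.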

Performance

The vLLM project's own evaluation reports:

- vLLM achieves 14–24× higher throughput than Hugging Face Transformers and 2.2–3.5× higher than early TGI for LLaMA models on NVIDIA GPUs.

KV and memory behavior

- PagedAttention provides a KV layout that is both GPU friendly and fragmentation resistant.

- FP8 KV quantization reduces KV size and improves decode throughput when compute is not the bottleneck.
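
To make the effect of KV quantization concrete, here is a back-of-the-envelope calculation of KV cache size. The model shape below (32 layers, 8 KV heads, head dimension 128) is an assumed configuration for illustration, and the sketch ignores any per-block scaling factors that a quantized cache may store.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, num_tokens, bytes_per_elem):
    """Approximate KV cache size for one sequence."""
    # The factor 2 accounts for storing both keys and values.
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_elem


# Assumed model shape and context length, for illustration only.
shape = dict(num_layers=32, num_kv_heads=8, head_dim=128, num_tokens=8192)

fp16_bytes = kv_cache_bytes(**shape, bytes_per_elem=2)
fp8_bytes = kv_cache_bytes(**shape, bytes_per_elem=1)

print(fp16_bytes / 2**20, "MiB in FP16")  # 1024.0 MiB
print(fp8_bytes / 2**20, "MiB in FP8")    # 512.0 MiB
```

Halving the bytes per element halves both the memory held per sequence and the bytes read per decode step, which is why the gain shows up mainly when decode is limited by memory bandwidth rather than compute.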

Where it fits

- Default high performance engine when you need a general LLM serving backend with good throughput, good TTFT, and hardware flexibility.

2. TensorRT LLM

Design

TensorRT LLM is a compilation based engine on top of NVIDIA TensorRT. It generates fused kernels per model and shape, and exposes an executor API used by frameworks such as Triton.

Its KV subsystem is explicit and feature rich:

- Paged KV cache

- Quantized KV cache (INT8, FP8, with some combinations still evolving)

- Circular buffer KV cache

- KV cache reuse, including offloading KV to CPU and reusing it across prompts to reduce TTFT

NVIDIA reports that CPU based KV reuse can reduce time to first token by up to 14× on H100 and even more on GH200 in specific scenarios.

Performance

TensorRT LLM is highly tunable, so results vary. Common patterns from public comparisons and vendor benchmarks:

- Very low single request latency on NVIDIA GPUs when engines are compiled for the exact model and configuration.

- At moderate concurrency, it can be tuned either for low TTFT or for high throughput; at very high concurrency, throughput optimized profiles push P99 up due to aggressive batching.

KV and memory behavior

- Paged KV plus quantized KV gives strong control over memory use and bandwidth.

- Executor and memory APIs let you design cache aware routing policies at the application layer.
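
As a rough illustration of cache aware routing at the application layer, the sketch below sends each request to the replica whose recent prompts share the longest token prefix with it, breaking ties by load. It does not use any TensorRT LLM API: `CacheAwareRouter`, the replica names, and the scoring rule are all hypothetical.

```python
def shared_prefix_len(a, b):
    """Number of leading tokens that two token lists have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


class CacheAwareRouter:
    """Hypothetical router that prefers replicas likely to hold a reusable KV prefix."""

    def __init__(self, replica_names):
        self.cached = {name: [] for name in replica_names}  # prompts seen per replica
        self.load = {name: 0 for name in replica_names}     # requests sent per replica

    def route(self, prompt_tokens):
        def score(name):
            best = max(
                (shared_prefix_len(prompt_tokens, p) for p in self.cached[name]),
                default=0,
            )
            # Longer shared prefix wins; lower load breaks ties.
            return (best, -self.load[name])

        target = max(self.cached, key=score)
        self.cached[target].append(list(prompt_tokens))
        self.load[target] += 1
        return target


router = CacheAwareRouter(["gpu0", "gpu1"])
first = router.route([1, 2, 3, 4])   # no cache anywhere, goes to the first replica
second = router.route([9, 9, 9])     # no shared prefix, goes to the less loaded replica
third = router.route([1, 2, 3, 7])   # shares 3 tokens with the first prompt
print(first, second, third)
```

A production router would also need to track eviction, since a prefix that has been dropped from a replica's cache no longer justifies sending traffic there.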

Where it fits

- Latency critical workloads and NVIDIA only environments, where teams can invest in engine builds and per model tuning.

3. Hugging Face TGI v3

Design

Text Generation Inference (TGI) is a server focused stack with:

- Rust based HTTP and gRPC server

- Continuous batching, streaming, safety hooks

- Backends for PyTorch and TensorRT, plus tight Hugging Face Hub integration

TGI v3 adds a new long context pipeline:

- Chunked prefill for long inputs

- Prefix KV caching so long conversation histories are not recomputed on each request
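
The two ideas in this list combine naturally: skip the tokens whose KV is already cached, then process the remainder in bounded chunks. The sketch below is a generic illustration, not TGI's code; `chunk_prompt`, `plan_prefill`, and the chunk size are hypothetical.

```python
def chunk_prompt(prompt_tokens, chunk_size):
    """Split a prompt into consecutive chunks of at most chunk_size tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        prompt_tokens[i:i + chunk_size]
        for i in range(0, len(prompt_tokens), chunk_size)
    ]


def plan_prefill(prompt_tokens, cached_prefix_len, chunk_size):
    """Return the chunks that still need a forward pass.

    Tokens before cached_prefix_len are assumed to have KV in the cache already,
    so only the remaining suffix is chunked and computed.
    """
    remaining = prompt_tokens[cached_prefix_len:]
    return chunk_prompt(remaining, chunk_size)


history = list(range(10))  # stand-in token ids for a long conversation
plan = plan_prefill(history, cached_prefix_len=4, chunk_size=4)
print(plan)  # [[4, 5, 6, 7], [8, 9]]
```

Bounding the chunk size caps the work done in any single forward pass, which lets a scheduler interleave decode steps for other requests between the chunks of one long prompt.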

Performance

Public comparisons and vendor reported numbers show:

- On conventional prompts, vLLM often edges out TGI on raw tokens per second at high concurrency due to PagedAttention, but the difference is not huge on many setups.

- On long prompts, Hugging Face reports that TGI v3 processes around 3× more tokens and is up to 13× faster than vLLM, in a setup with very long histories and prefix caching enabled.

Latency profile:

- P50 for short and mid length prompts is similar to vLLM when both are tuned with continuous batching.

- For long chat histories, prefill dominates in naive pipelines; TGI v3’s reuse of earlier tokens gives a large win in TTFT and P50.

KV and memory behavior

- TGI uses KV caching with paged attention style kernels and reduces memory footprint through chunking of prefill and other runtime changes.

- It integrates quantization through bitsandbytes and GPTQ and runs across several hardware backends.

Where it fits

- Production stacks already on Hugging Face, especially for chat style workloads with long histories where prefix caching gives large real world gains.

4. LMDeploy

Design

LMDeploy is a toolkit for compression and deployment from the InternLM ecosystem. It exposes two engines:

- TurboMind: high performance CUDA kernels for NVIDIA GPUs

- PyTorch engine: flexible fallback

Key runtime features:

- Persistent, continuous batching

- Blocked KV cache with a manager for allocation and reuse

- Dynamic split and fuse for attention blocks

- Tensor parallelism

- Weight only and KV quantization (including AWQ and online INT8 / INT4 KV quant)

LMDeploy reports up to 1.8× higher request throughput than vLLM and attributes this to persistent batching, blocked KV and optimized kernels.
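
The scheduling idea behind persistent, continuous batching can be shown with a toy simulation: free slots are refilled before every decode step rather than after the whole batch drains. This is a generic sketch, not LMDeploy's scheduler; the function name and the request format are hypothetical.

```python
from collections import deque


def run_continuous_batching(requests, max_batch_size):
    """Simulate decode steps; requests is a list of (request_id, tokens_to_generate).

    Returns a dict mapping request_id to the decode step at which it finished.
    """
    waiting = deque(requests)
    running = {}   # request_id -> tokens still to generate
    finished = {}
    step = 0
    while waiting or running:
        # Refill free slots before every step instead of waiting for the batch to drain.
        while waiting and len(running) < max_batch_size:
            request_id, remaining = waiting.popleft()
            running[request_id] = remaining
        step += 1
        for request_id in list(running):
            running[request_id] -= 1  # one decode step emits one token per sequence
            if running[request_id] <= 0:
                finished[request_id] = step
                del running[request_id]
    return finished


result = run_continuous_batching([("a", 1), ("b", 3), ("c", 2)], max_batch_size=2)
print(result)  # {'a': 1, 'b': 3, 'c': 3}
```

With static batching, request `c` would have to wait until both `a` and `b` finished at step 3 and would complete at step 5. Here it takes over the slot freed by `a` and completes at step 3.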

Performance

Evaluations show:

- For 4 bit Llama style models on A100, LMDeploy can reach higher tokens per second than vLLM under comparable latency constraints, especially at high concurrency.

- LMDeploy also reports that 4 bit inference is about 2.4× faster than FP16 for supported models.

Latency:

- Single request TTFT is in the same ballpark as other optimized GPU engines when configured without extreme batch limits.

- Under heavy concurrency, persistent batching plus blocked KV let LMDeploy sustain high throughput without TTFT collapse.

KV and memory behavior

- Blocked KV cache trades contiguous per sequence buffers for a grid of KV chunks managed by the runtime, similar in spirit to vLLM’s PagedAttention but with a different internal layout.

- Support for weight and KV quantization targets large models on constrained GPUs.

Where it fits

- NVIDIA centric deployments that want maximum throughput and are comfortable using TurboMind and LMDeploy specific tooling.

5. SGLang

Design

SGLang is both:

- A DSL for building structured LLM programs such as agents, RAG workflows and tool pipelines

- A runtime that implements RadixAttention, a KV reuse mechanism that shares prefixes using a radix tree structure rather than simple block hashes.

RadixAttention:

- Stores KV for many requests in a prefix tree keyed by tokens

- Enables high KV hit rates when many calls share prefixes, such as few shot prompts, multi turn chat, or tool chains
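
A minimal sketch of prefix matching over a token keyed tree is shown below. For brevity it uses a plain trie with one token per edge rather than a compressed radix tree, and it tracks only which prefixes exist, not the KV tensors themselves. `PrefixNode` and `PrefixCache` are hypothetical names, not SGLang classes.

```python
class PrefixNode:
    def __init__(self):
        # token id -> child node; a real runtime would also reference cached KV here
        self.children = {}


class PrefixCache:
    """Plain token trie standing in for a radix tree of cached prefixes."""

    def __init__(self):
        self.root = PrefixNode()

    def insert(self, tokens):
        """Record that KV for this token sequence is now cached."""
        node = self.root
        for token in tokens:
            node = node.children.setdefault(token, PrefixNode())

    def match_len(self, tokens):
        """Length of the longest cached prefix of tokens."""
        node, matched = self.root, 0
        for token in tokens:
            node = node.children.get(token)
            if node is None:
                break
            matched += 1
        return matched


cache = PrefixCache()
cache.insert([1, 2, 3, 4])             # e.g. a shared few shot prompt
hit = cache.match_len([1, 2, 3, 9])    # new request reusing most of that prompt
miss = cache.match_len([5, 6])
print(hit, miss)  # 3 0
```

Only the tokens after the matched prefix need prefill, so the hit length translates directly into saved compute for requests that share a long common context.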

Performance

Reported results:

- SGLang achieves up to 6.4× higher throughput and up to 3.7× lower latency than baseline systems such as vLLM, LMQL and others on structured workloads.

- Improvements are largest when there is heavy prefix reuse, for example multi turn chat or evaluation workloads with repeated context.

Reported KV cache hit rates range from roughly 50% to 99%, and cache aware schedulers get close to the optimal hit rate on the measured benchmarks.

KV and memory behavior

- RadixAttention sits on top of paged attention style kernels and focuses on reuse rather than just allocation.

- SGLang integrates well with hierarchical context caching systems that move KV between GPU and CPU when sequences are long, although those systems are usually implemented as separate projects.

Where it fits

- Agentic systems, tool pipelines, and heavy RAG applications where many calls share large prompt prefixes and KV reuse matters at the application level.

6. DeepSpeed Inference / ZeRO Inference

Design

DeepSpeed provides two pieces relevant for inference:

- DeepSpeed Inference: optimized transformer kernels plus tensor and pipeline parallelism

- ZeRO Inference / ZeRO Offload: techniques that offload model weights, and in some setups KV cache, to CPU or NVMe to run very large models on limited GPU memory

ZeRO Inference focuses on:

- Keeping little or no model weights resident in GPU

- Streaming tensors from CPU or NVMe as needed

- Targeting throughput and model size rather than low latency

Performance

In the ZeRO Inference OPT 30B example on a single V100 32GB:

- Full CPU offload reaches about 43 tokens per second

- Full NVMe offload reaches about 30 tokens per second

- Both are 1.3–2.4× faster than partial offload configurations, because full offload enables larger batch sizes

These numbers are small compared to GPU resident LLM runtimes on A100 or H100, but they apply to a model that does not fit natively in 32GB.
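
The link between batch size and throughput in offload setups can be seen in a simplified model: if every decode step has to stream all weights over the host link once, the step time is roughly fixed and each extra sequence in the batch adds a token almost for free. The sketch below ignores compute time, overlap, and KV traffic, and the weight size and bandwidth are assumed values, not measurements from the example above.

```python
def offload_tokens_per_second(weight_bytes, link_bytes_per_second, batch_size):
    """Upper bound on decode throughput when weights are streamed once per step."""
    # Time for one decode step, assuming weight transfer dominates everything else.
    step_seconds = weight_bytes / link_bytes_per_second
    # Each step yields one new token for every sequence in the batch.
    return batch_size / step_seconds


# Assumed numbers for illustration: 30B parameters in FP16, 16 GB/s effective link.
weight_bytes = 30e9 * 2
link_bytes_per_second = 16e9

small_batch = offload_tokens_per_second(weight_bytes, link_bytes_per_second, 32)
large_batch = offload_tokens_per_second(weight_bytes, link_bytes_per_second, 64)
print(round(small_batch, 2), round(large_batch, 2))
```

In this model throughput scales linearly with batch size, which is consistent with the observation that freeing GPU memory through full offload pays off by allowing larger batches.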

A recent I/O characterization of DeepSpeed and FlexGen finds that offload based systems are dominated by small 128 KiB reads and that I/O behavior becomes the main bottleneck.

KV and memory behavior

- Model weights and sometimes KV blocks are offloaded to CPU or SSD to fit models beyond GPU capacity.

- TTFT and P99 are high compared to pure GPU engines, but the tradeoff is the ability to run very large models that otherwise would not fit.

Where it fits

- Offline or batch inference, or low QPS services, where fitting a very large model on few GPUs matters more than latency.

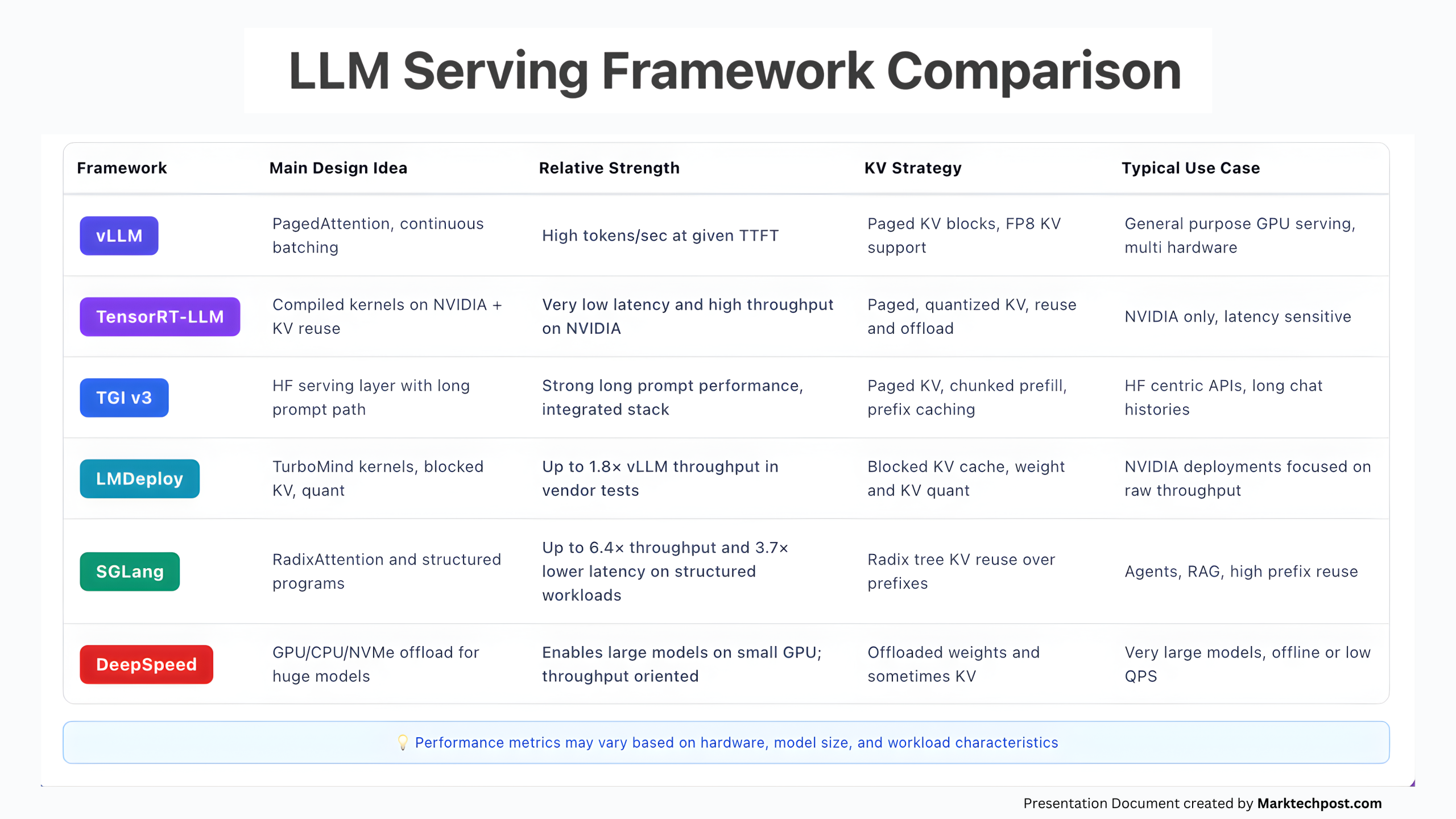

Comparison Tables

This table summarizes the main tradeoffs qualitatively:

| Runtime | Main design idea | Relative strength | KV strategy | Typical use case |
|---|---|---|---|---|
| vLLM | PagedAttention, continuous batching | High tokens per second at given TTFT | Paged KV blocks, FP8 KV support | General purpose GPU serving, multi hardware |
| TensorRT LLM | Compiled kernels on NVIDIA + KV reuse | Very low latency and high throughput on NVIDIA | Paged, quantized KV, reuse and offload | NVIDIA only, latency sensitive |
| TGI v3 | HF serving layer with long prompt path | Strong long prompt performance, integrated stack | Paged KV, chunked prefill, prefix caching | HF centric APIs, long chat histories |
| LMDeploy | TurboMind kernels, blocked KV, quant | Up to 1.8× vLLM throughput in vendor tests | Blocked KV cache, weight and KV quant | NVIDIA deployments focused on raw throughput |
| SGLang | RadixAttention and structured programs | Up to 6.4× throughput and 3.7× lower latency on structured workloads | Radix tree KV reuse over prefixes | Agents, RAG, high prefix reuse |
| DeepSpeed | GPU CPU NVMe offload for huge models | Enables large models on small GPU; throughput oriented | Offloaded weights and sometimes KV | Very large models, offline or low QPS |

Choosing a runtime in practice

For a production system, the choice tends to collapse to a few simple patterns:

- You want a strong default engine with minimal custom work: You can start with vLLM. It gives you good throughput, reasonable TTFT, and solid KV handling on common hardware.

- You are committed to NVIDIA and want fine grained control over latency and KV: You can use TensorRT LLM, likely behind Triton or TGI. Plan for model specific engine builds and tuning.

- Your stack is already on Hugging Face and you care about long chats: You can use TGI v3. Its long prompt pipeline and prefix caching are very effective for conversation style traffic.

- You want maximum throughput per GPU with quantized models: You can use LMDeploy with TurboMind and blocked KV, especially for 4 bit Llama family models.

- You are building agents, tool chains or heavy RAG systems: You can use SGLang and design prompts so that KV reuse via RadixAttention is high.

- You must run very large models on limited GPUs: You can use DeepSpeed Inference / ZeRO Inference, accept higher latency, and treat the GPU as a throughput engine with SSD in the loop.

Overall, all these engines are converging on the same idea: KV cache is the real bottleneck resource. The winners are the runtimes that treat KV as a first class data structure to be paged, quantized, reused and offloaded, not just a big tensor slapped into GPU memory.

The post Comparing the Top 6 Inference Runtimes for LLM Serving in 2025 appeared first on MarkTechPost.