
Reliable multi-agent systems are mostly a memory design problem. Once agents call tools, collaborate, and run long workflows, you need explicit mechanisms for what gets stored, how it is retrieved, and how the system behaves when memory is wrong or missing.

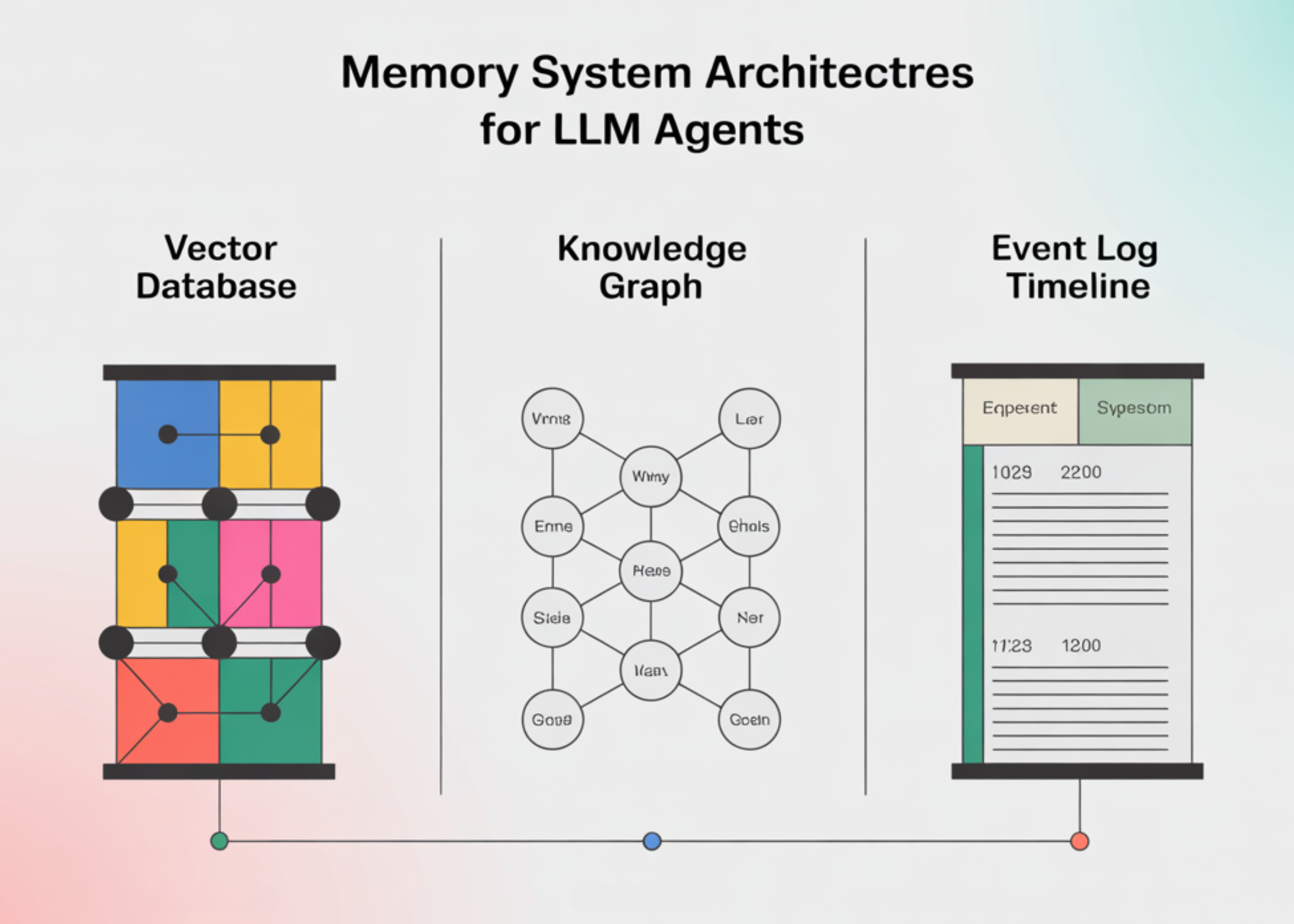

This article compares 6 memory system patterns commonly used in agent stacks, grouped into 3 families:

- Vector memory

- Graph memory

- Event / execution logs

We focus on retrieval latency, hit rate, and failure modes in multi-agent planning.

High-Level Comparison

| Family | System pattern | Data model | Strengths | Main weaknesses |
|---|---|---|---|---|
| Vector | Plain vector RAG | Embedding vectors | Simple, fast ANN retrieval, widely supported | Loses temporal / structural context, semantic drift |
| Vector | Tiered vector (MemGPT-style virtual context) | Working set + vector archive | Better reuse of important info, bounded context size | Paging policy errors, per-agent divergence |
| Graph | Temporal KG memory (Zep / Graphiti) | Temporal knowledge graph | Strong temporal, cross-session reasoning, shared view | Requires schema + update pipeline, can have stale edges |
| Graph | Knowledge-graph RAG (GraphRAG) | KG + hierarchical communities | Multi-doc, multi-hop questions, global summaries | Graph construction and summarization bias, traceability overhead |
| Event / Logs | Execution logs / checkpoints (ALAS, LangGraph) | Ordered versioned log | Ground truth of actions, supports replay and repair | Log bloat, missing instrumentation, side-effect-safe replay required |
| Event / Logs | Episodic long-term memory | Episodes + metadata | Long-horizon recall, pattern reuse across tasks | Episode boundary errors, consolidation errors, cross-agent misalignment |

Next, we go through each family in turn.

1. Vector Memory Systems

1.1 Plain Vector RAG

What it is

The default pattern in most RAG and agent frameworks:

- Encode text fragments (messages, tool outputs, documents) using an embedding model.

- Store vectors in an ANN index (FAISS, HNSW, ScaNN, etc.).

- At query time, embed the query and retrieve top-k nearest neighbors, optionally rerank.

This is the ‘vector store memory’ exposed by typical LLM orchestration libraries.
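The three steps above can be sketched in a few lines. This is a toy illustration, not any framework's API: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and `VectorMemory` does exact brute-force search where a production system would use an ANN index such as FAISS or HNSW.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorMemory:
    """Exact top-k search over stored fragments (assumption: no ANN index)."""

    def __init__(self) -> None:
        self.items = []  # list of (text, vector) pairs

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.add("The deployment budget cap is 500 dollars per month")
memory.add("User prefers the eu-west region for staging")
memory.add("The weather tool returned sunny for Berlin")

top = memory.search("what is the budget cap", k=1)
print(top)
```

Note that nothing in this structure records when a fragment was written or how fragments relate to each other, which is the root of the temporal and multi-hop weaknesses discussed below.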

Latency profile

Approximate nearest-neighbor indexes are designed for sublinear scaling with corpus size:

- Graph-based ANN structures like HNSW typically show empirically near-logarithmic latency growth vs corpus size for fixed recall targets.

- On a single node with tuned parameters, retrieval from an index of up to millions of items usually takes low tens of milliseconds per query, plus any reranking cost.

Main cost components:

- ANN search in the vector index.

- Additional reranking (e.g., cross-encoder) if used.

- LLM attention cost over concatenated retrieved chunks.

Hit-rate behavior

Hit rate is high when:

- The query is local (‘what did we just talk about’), or

- The information lives in a small number of chunks with embeddings aligned to the query model.

Vector RAG performs significantly worse on:

- Temporal queries (‘what did the user decide last week’).

- Cross-session reasoning and long histories.

- Multi-hop questions requiring explicit relational paths.

Benchmarks such as Deep Memory Retrieval (DMR) and LongMemEval target long-horizon and temporal recall, which is exactly where naive vector RAG tends to degrade.

Failure modes in multi-agent planning

- Lost constraints: top-k retrieval misses a critical global constraint (budget cap, compliance rule), so a planner generates invalid tool calls.

- Semantic drift: approximate neighbors match on topic but differ in key identifiers (region, environment, user ID), leading to wrong arguments.

- Context dilution: too many partially relevant chunks are concatenated; the model underweights the important part, especially in long contexts.

When it is fine

- Single-agent or short-horizon tasks.

- Q&A over small to medium corpora.

- As a first-line semantic index over logs, docs, and episodes, not as the final authority.

1.2 Tiered Vector Memory (MemGPT-Style Virtual Context)

What it is

MemGPT introduces a virtual-memory abstraction for LLMs: a small working context plus larger external archives, managed by the model using tool calls (e.g., ‘swap in this memory’, ‘archive that section’). The model decides what to keep in the active context and what to fetch from long-term memory.

Architecture

- Active context: the tokens currently present in the LLM input (analogous to RAM).

- Archive / external memory: larger storage, often backed by a vector DB and object store.

- The LLM uses specialized functions to:

  - Load archived content into context.

  - Evict parts of the current context to the archive.
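A minimal sketch of the active-context / archive split follows. It is not MemGPT's implementation: the class name `TieredMemory`, the key-based `load` call, and the least-recently-used eviction rule are all assumptions chosen for illustration. In MemGPT the model itself decides what to page in and out via tool calls.

```python
from collections import OrderedDict
from typing import Optional


class TieredMemory:
    """Bounded working set with LRU eviction into an archive (illustrative only)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.active = OrderedDict()  # analogous to RAM: what the LLM sees
        self.archive = {}            # analogous to disk: external storage

    def write(self, key: str, text: str) -> None:
        """Put an item into the active context, evicting old items if needed."""
        self.active[key] = text
        self.active.move_to_end(key)
        self._evict_if_needed()

    def load(self, key: str) -> Optional[str]:
        """Page an item into the active context, from the archive if necessary."""
        if key in self.active:
            self.active.move_to_end(key)
            return self.active[key]
        if key in self.archive:
            self.write(key, self.archive.pop(key))
            return self.active[key]
        return None

    def _evict_if_needed(self) -> None:
        # Move least recently used items to the archive until we fit.
        while len(self.active) > self.capacity:
            old_key, old_text = self.active.popitem(last=False)
            self.archive[old_key] = old_text

    def context(self) -> str:
        """Text that would be placed in the LLM prompt."""
        return "\n".join(self.active.values())


mem = TieredMemory(capacity=2)
mem.write("constraint", "Budget cap: 500 USD")
mem.write("step1", "Created staging cluster")
mem.write("step2", "Ran smoke tests")      # evicts "constraint" to the archive
evicted = "constraint" in mem.archive
recalled = mem.load("constraint")          # pages it back in, evicting "step1"
```

The usage lines show the paging risk in miniature: the budget constraint silently leaves the active context after two unrelated writes, and it only comes back if something explicitly asks for it.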

Latency profile

Two regimes:

- Within active context: no external retrieval is needed; the only cost is attention over tokens already in the prompt.

- Archive accesses: similar to plain vector RAG, but often targeted:

  - Search space is narrowed by task, topic, or session ID.

  - The controller can cache “hot” entries.

Overall, you still pay vector search and serialization costs when paging, but you avoid sending large, irrelevant context to the model at each step.

Hit-rate behavior

Improvement relative to plain vector RAG:

- Frequently accessed items are kept in the working set, so they do not depend on ANN retrieval every step.

- Rare or old items still suffer from vector-search limitations.

The core new error surface is paging policy rather than pure similarity.

Failure modes in multi-agent planning

- Paging errors: the controller archives something that is needed later, or fails to recall it, causing latent constraint loss.

- Per-agent divergence: if each agent manages its own working set over a shared archive, agents may hold different local views of the same global state.

- Debugging complexity: failures depend on both model reasoning and memory management decisions, which must be inspected together.

When it is useful

- Long conversations and workflows where naive context growth is not viable.

- Systems where you want vector RAG semantics but bounded context usage.

- Scenarios where you can invest in designing / tuning paging policies.

2. Graph Memory Systems

2.1 Temporal Knowledge Graph Memory (Zep / Graphiti)

What it is

Zep positions itself as a memory layer for AI agents implemented as a temporal knowledge graph (Graphiti). It integrates:

- Conversational history.

- Structured business data.

- Temporal attributes and versioning.

Zep evaluates this architecture on DMR and LongMemEval, comparing against MemGPT and long-context baselines.

Reported results include:

- 94.8% accuracy on DMR, versus 93.4% for a MemGPT baseline.

- Up to 18.5% higher accuracy and about 90% lower response latency than certain baselines on LongMemEval for complex temporal reasoning.

These numbers underline the benefit of explicit temporal structure over pure vector recall on long-term tasks.

Architecture

Core components:

- Nodes: entities (users, tickets, resources), events (messages, tool calls).

- Edges: relations (created, depends_on, updated_by, discussed_in).

- Temporal indexing: validity intervals and timestamps on nodes/edges.

- APIs for:

  - Writing new events / facts into the KG.

  - Querying along entity and temporal dimensions.

The KG can coexist with a vector index for semantic entry points.
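To make the idea of validity intervals concrete, here is a small sketch. It does not reflect the Zep or Graphiti APIs; `Edge`, `TemporalGraph`, `assert_fact`, and `query` are hypothetical names, timestamps are plain integers, and each `(source, relation)` pair is assumed to hold a single value at a time, so a new fact closes the interval of the one it supersedes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Edge:
    source: str
    relation: str
    target: str
    valid_from: int                  # inclusive timestamp
    valid_to: Optional[int] = None   # exclusive; None means still valid


class TemporalGraph:
    def __init__(self) -> None:
        self.edges = []

    def assert_fact(self, source: str, relation: str, target: str, at: int) -> None:
        """Record a new fact and close the interval of the fact it supersedes."""
        updated = []
        for e in self.edges:
            if e.source == source and e.relation == relation and e.valid_to is None:
                e = Edge(e.source, e.relation, e.target, e.valid_from, at)
            updated.append(e)
        updated.append(Edge(source, relation, target, at))
        self.edges = updated

    def query(self, source: str, relation: str, as_of: int) -> list:
        """Return the targets of edges that were valid at time `as_of`."""
        return [
            e.target
            for e in self.edges
            if e.source == source
            and e.relation == relation
            and e.valid_from <= as_of
            and (e.valid_to is None or as_of < e.valid_to)
        ]


g = TemporalGraph()
g.assert_fact("service-a", "has_config", "v1", at=10)
g.assert_fact("service-a", "has_config", "v2", at=20)

past = g.query("service-a", "has_config", as_of=15)
current = g.query("service-a", "has_config", as_of=25)
```

Because old edges are closed rather than deleted, "what state was this resource in at time T" remains answerable after the state changes.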

Latency profile

Graph queries are typically bounded by small traversal depths:

- For questions like “latest configuration that passed checks,” the system:

  - Locates the relevant entity node.

  - Traverses outgoing edges with temporal filters.

- Complexity scales with the size of the local neighborhood, not the full graph.
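The "latest configuration that passed checks" lookup can be sketched with an adjacency list. The names here (`EntityGraph`, `latest`, the `checks_passed` property) are illustrative assumptions rather than any product's schema. The point is that the query only touches the outgoing edges of one node.

```python
from collections import defaultdict


class EntityGraph:
    def __init__(self) -> None:
        self.out_edges = defaultdict(list)  # node -> [(relation, target, timestamp)]
        self.props = {}                     # node -> property dict

    def add_node(self, node: str, **props) -> None:
        self.props[node] = props

    def add_edge(self, source: str, relation: str, target: str, timestamp: int) -> None:
        self.out_edges[source].append((relation, target, timestamp))

    def latest(self, source: str, relation: str, before: int, predicate):
        """Newest neighbor over `relation` at or before `before` that passes `predicate`."""
        matches = [
            (ts, target)
            for rel, target, ts in self.out_edges[source]  # local neighborhood only
            if rel == relation and ts <= before and predicate(self.props.get(target, {}))
        ]
        return max(matches)[1] if matches else None


g = EntityGraph()
g.add_node("cfg-1", checks_passed=True)
g.add_node("cfg-2", checks_passed=True)
g.add_node("cfg-3", checks_passed=False)
g.add_edge("service-a", "has_config", "cfg-1", timestamp=10)
g.add_edge("service-a", "has_config", "cfg-2", timestamp=20)
g.add_edge("service-a", "has_config", "cfg-3", timestamp=30)

passed = lambda props: props.get("checks_passed", False)
latest_good = g.latest("service-a", "has_config", before=35, predicate=passed)
```

Here the newest configuration (`cfg-3`) is skipped because it failed checks, and the work done is proportional to the number of edges leaving `service-a`, regardless of how large the rest of the graph is.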

In practice, Zep reports order-of-magnitude latency benefits vs baselines that either scan long contexts or rely on less structured retrieval.

Hit-rate behavior

Graph memory excels when:

- Queries are entity-centric and temporal.

- You need cross-session consistency, e.g., “what did this user previously request,” “what state was this resource in at time T”.

- Multi-hop reasoning is required (“if ticket A depends on B and B failed after policy P changed, what is the likely cause?”).

Hit rate is limited by graph coverage: missing edges or incorrect timestamps directly reduce recall.

Failure modes in multi-agent planning

- Stale edges / lagging updates: if real systems change but graph updates are delayed, plans operate on incorrect world models.

- Schema drift: evolving the KG schema without synchronized changes in retrieval prompts or planners yields subtle errors.

- Access control partitions: multi-tenant scenarios can yield partial views per agent; planners must be aware of visibility constraints.

When it is useful

- Multi-agent systems coordinating on shared entities (tickets, users, inventories).

- Long-running tasks where temporal ordering is critical.

- Environments where you can maintain ETL / streaming pipelines into the KG.

2.2 Knowledge-Graph RAG (GraphRAG)

What it is

GraphRAG is a retrieval-augmented generation pipeline from Microsoft that builds an explicit knowledge graph over a corpus and performs hierarchical community detection (e.g., Hierarchical Leiden) to organize the graph. It stores summaries per community and uses them at query time.

Pipeline:

- Extract entities and relations from source documents.

- Build the KG.

- Run community detection and build a multi-level hierarchy.

- Generate summaries for communities and key nodes.

- At query time:

  - Identify relevant communities (via keywords, embeddings, or graph heuristics).

  - Retrieve summaries and supporting nodes.

  - Pass them to the LLM.
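The pipeline stages above can be mimicked end to end on toy data. This sketch is not the GraphRAG library: the `relations` list stands in for LLM-based extraction, connected components stand in for Hierarchical Leiden (a deliberate simplification with a single level), `summarize` just joins source sentences instead of calling an LLM, and community selection uses plain keyword overlap.

```python
from collections import defaultdict

# Hypothetical output of the extraction step: (entity, entity, source sentence).
relations = [
    ("auth-service", "token-cache", "auth-service reads sessions from token-cache"),
    ("token-cache", "redis-cluster", "token-cache is hosted on redis-cluster"),
    ("billing-api", "invoice-db", "billing-api writes to invoice-db"),
]


def build_graph(rels):
    """Undirected entity graph as an adjacency map."""
    graph = defaultdict(set)
    for a, b, _ in rels:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def communities(graph):
    """Connected components, used only as a simple stand-in for Leiden."""
    seen, result = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, members = [start], set()
        while stack:
            node = stack.pop()
            if node in members:
                continue
            members.add(node)
            stack.extend(graph[node] - members)
        seen |= members
        result.append(members)
    return result


def summarize(members, rels):
    """Placeholder summary: join source sentences (a real pipeline would use an LLM)."""
    return " ; ".join(s for a, b, s in rels if a in members and b in members)


def retrieve(query, comms, rels):
    """Pick the community whose entity names overlap the query most."""
    terms = set(query.lower().split())
    best = max(comms, key=lambda members: len(terms & members))
    return summarize(best, rels)


graph = build_graph(relations)
comms = communities(graph)
answer_context = retrieve("why is token-cache slow on redis-cluster", comms, relations)
```

Even in this reduced form, the query returns one compact summary covering two source sentences, rather than a set of independently retrieved chunks.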

Latency profile

- Indexing is heavier than vanilla RAG (graph construction, clustering, summarization).

- Query-time latency can be competitive or better for large corpora, because:

  - You retrieve a small number of summaries.

  - You avoid constructing extremely long contexts from many raw chunks.

Latency mostly depends on:

- Community search (often vector search over summaries).

- Local graph traversal inside selected communities.

Hit-rate behavior

GraphRAG tends to outperform plain vector RAG when:

- Queries are multi-document and multi-hop.

- You need global structure, e.g., “how did this design evolve,” “what chain of incidents led to this outage.”

- You want answers that integrate evidence from many documents.

The hit rate depends on graph quality and community structure: if entity extraction misses relations, they simply do not exist in the graph.

Failure modes

- Graph construction bias: extraction errors or missing edges lead to systematic blind spots.

- Over-summarization: community summaries may drop rare but important details.

- Traceability cost: tracing an answer back from summaries to raw evidence adds complexity, which matters most in regulated or safety-critical settings.

When it is useful

- Large knowledge bases and documentation sets.

- Systems where agents must answer design, policy, or root-cause questions that span many documents.

- Scenarios where you can afford the one-time indexing and maintenance cost.

3. Event and Execution Log Systems

3.1 Execution Logs and Checkpoints (ALAS, LangGraph)

What they are

These systems treat ‘what the agents did’ as a first-class data structure.

- ALAS: a transactional multi-agent framework that maintains a versioned execution log plus:

  - Validator isolation: a separate LLM checks plans/results with its own context.

  - Localized Cascading Repair: only a minimal region of the log is edited when failures occur.

- LangGraph: exposes thread-scoped checkpoints of an agent graph (messages, tool outputs, node states) that can be persisted, resumed, and branched.

In both cases, the log / checkpoints are the ground truth for:

- Actions taken.

- Inputs and outputs.

- Control-flow decisions.
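As a rough illustration of a versioned log with checkpoints, consider the sketch below. It is neither the ALAS log format nor the LangGraph checkpointer API; `LogEntry`, `ExecutionLog`, and `branch_from` are hypothetical names, and everything is kept in memory rather than persisted.

```python
import copy
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class LogEntry:
    version: int
    agent: str
    action: str
    inputs: dict
    output: Any


@dataclass
class ExecutionLog:
    entries: list = field(default_factory=list)
    checkpoints: dict = field(default_factory=dict)  # version -> state snapshot

    def append(self, agent: str, action: str, inputs: dict, output: Any) -> int:
        """Append-only write; returns the new entry's version number."""
        version = len(self.entries) + 1
        self.entries.append(LogEntry(version, agent, action, dict(inputs), output))
        return version

    def checkpoint(self, state: dict) -> int:
        """Snapshot state as of the current log version."""
        version = len(self.entries)
        self.checkpoints[version] = copy.deepcopy(state)
        return version

    def tail(self, n: int = 1) -> list:
        return self.entries[-n:]

    def branch_from(self, version: int) -> "ExecutionLog":
        """New log sharing history up to `version`, for repair or what-if runs."""
        fork = ExecutionLog(entries=list(self.entries[:version]))
        fork.checkpoints = {
            v: copy.deepcopy(s) for v, s in self.checkpoints.items() if v <= version
        }
        return fork


log = ExecutionLog()
log.append("planner", "create_plan", {"goal": "migrate db"}, "plan-1")
log.append("executor", "run_tool", {"tool": "backup"}, "ok")
cp = log.checkpoint({"step": 2})
log.append("executor", "run_tool", {"tool": "migrate"}, "error")

fork = log.branch_from(cp)  # resume from the last good state
```

Branching from the checkpoint keeps the failed step in the original log for auditing while the fork continues from the last known-good version.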

Latency profile

- For normal forward execution:

  - Reading the tail of the log or a recent checkpoint is O(1) in log length and touches little data.

  - Latency mostly comes from LLM inference and tool calls, not log access.

- For analytics / global queries:

  - You need secondary indexes or offline processing; raw scanning is O(n).

Hit-rate behavior

For questions like ‘what happened,’ ‘which tools were called with which arguments,’ and ‘what was the state before this failure,’ hit rate is effectively 100%, assuming:

- All relevant actions are instrumented.

- Log persistence and retention are correctly configured.

Logs do not provide semantic generalization by themselves; you layer vector or graph indices on top for semantics across executions.

Failure modes

- Log bloat: high-volume systems generate large logs; improper retention or compaction can silently drop history.

- Partial instrumentation: missing tool or agent traces yield blind spots in replay and debugging.

- Unsafe replay: naively re-running log steps can re-trigger external side effects (payments, emails) unless idempotency keys and compensation handlers exist.

ALAS explicitly tackles some of these via transactional semantics, idempotency, and localized repair.
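The unsafe-replay problem and the idempotency-key remedy can be shown in a few lines. This is a generic sketch, not how ALAS implements it: `SideEffectExecutor`, `send_payment`, and the key format `run-42:step-N` are made up for the example, and results are cached in memory where a real system would need durable storage.

```python
class SideEffectExecutor:
    """Runs each external action at most once per idempotency key."""

    def __init__(self) -> None:
        self.results = {}  # idempotency key -> recorded result
        self.calls = 0     # number of real side effects performed

    def execute(self, key, action, *args):
        if key in self.results:
            # Replay path: return the recorded result, do not call out again.
            return self.results[key]
        self.calls += 1
        result = action(*args)
        self.results[key] = result
        return result


def send_payment(amount: int) -> str:
    """Hypothetical external side effect."""
    return f"paid {amount}"


# Steps as they might be read back from an execution log.
log_steps = [
    ("run-42:step-1", send_payment, 100),
    ("run-42:step-2", send_payment, 250),
]

executor = SideEffectExecutor()
first = [executor.execute(key, fn, arg) for key, fn, arg in log_steps]
replayed = [executor.execute(key, fn, arg) for key, fn, arg in log_steps]
```

After the replay, `executor.calls` is still 2: the second pass reproduces the same outputs from the recorded results without issuing the payments again.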

When they are essential

- Any system where you care about observability, auditing, and debuggability.

- Multi-agent workflows with non-trivial failure semantics.

- Scenarios where you want automated repair or partial re-planning rather than full restart.

3.2 Episodic Long-Term Memory

What it is

Episodic memory structures store episodes: cohesive segments of interaction or work, each with:

- Task description and initial conditions.

- Relevant context.

- Sequence of actions (often references into the execution log).

- Outcomes and metrics.

Episodes are indexed with:

- Metadata (time windows, participants, tools).

- Embeddings (for similarity search).

- Optional summaries.

Some systems periodically distill recurring patterns into higher-level knowledge or use episodes to fine-tune specialized models.

Latency profile

Episodic retrieval is typically two-stage:

- Identify relevant episodes via metadata filters and/or vector search.

- Retrieve content within selected episodes (sub-search or direct log references).

Latency is higher than a single flat vector search on small data, but scales better as lifetime history grows, because you avoid searching over all individual events for every query.
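A compact sketch of the two-stage lookup follows. The `Episode` fields mirror the structure described earlier, but the names, the integer `day` field, and the Jaccard word-overlap score (a stand-in for embedding similarity) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    task: str
    tools: set
    day: int
    outcome: str
    events: list


def token_overlap(a: str, b: str) -> float:
    """Jaccard similarity over lowercase words; stand-in for embedding similarity."""
    x, y = set(a.lower().split()), set(b.lower().split())
    return len(x & y) / len(x | y) if x | y else 0.0


def find_episodes(episodes, query, tool=None, since_day=0, k=1):
    """Stage 1: metadata filter, then rank the survivors by similarity to the query."""
    candidates = [
        e for e in episodes
        if e.day >= since_day and (tool is None or tool in e.tools)
    ]
    ranked = sorted(candidates, key=lambda e: token_overlap(query, e.task), reverse=True)
    return ranked[:k]


def find_events(episode, keyword):
    """Stage 2: search only inside the selected episode."""
    return [event for event in episode.events if keyword in event]


episodes = [
    Episode("migrate postgres database to new cluster", {"pg_dump", "terraform"}, 12,
            "success", ["pg_dump completed", "terraform apply ok", "cutover done"]),
    Episode("rotate api keys for billing service", {"vault"}, 30,
            "success", ["vault rotate ok"]),
    Episode("migrate redis cache to new cluster", {"terraform"}, 45,
            "rolled back", ["terraform apply ok", "latency alarm", "rollback done"]),
]

best = find_episodes(episodes, "migrate postgres database", tool="terraform")[0]
steps = find_events(best, "terraform")
```

The first stage compares the query against three episode descriptions rather than seven individual events, and the second stage scans only the events of the chosen episode. The gap between those two counts widens as history accumulates.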

Hit-rate behavior

Episodic memory improves hit rate for:

- Long-horizon tasks: “have we run a similar migration before?”, “how did this kind of incident resolve in the past?”

- Pattern reuse: retrieving prior workflows plus outcomes, not just facts.

Hit rate still depends on episode boundaries and index quality.

Failure modes

- Episode boundary errors: too coarse (episodes that mix unrelated tasks) or too fine (episodes that cut mid-task).

- Consolidation mistakes: wrong abstractions during distillation propagate bias into parametric models or global policies.

- Multi-agent misalignment: per-agent episodes instead of per-task episodes make cross-agent reasoning harder.

When it is useful

- Long-lived agents and workflows spanning weeks or months.

- Systems where “similar past cases” are more useful than raw facts.

- Training / adaptation loops where episodes can feed back into model updates.

Key Takeaways

- Memory is a systems problem, not a prompt trick: Reliable multi-agent setups need explicit design around what is stored, how it is retrieved, and how the system reacts when memory is stale, missing, or wrong.

- Vector memory is fast but structurally weak: Plain and tiered vector stores give low-latency, sublinear retrieval, but struggle with temporal reasoning, cross-session state, and multi-hop dependencies, making them unreliable as the sole memory backbone in planning workflows.

- Graph memory fixes temporal and relational blind spots: Temporal KGs (e.g., Zep/Graphiti) and GraphRAG-style knowledge graphs improve hit rate and latency on entity-centric, temporal, and multi-document queries by encoding entities, relations, and time explicitly.

- Event logs and checkpoints are the ground truth: ALAS-style execution logs and LangGraph-style checkpoints provide the authoritative record of what agents actually did, enabling replay, localized repair, and real observability in production systems.

- Robust systems compose multiple memory layers: Practical agent architectures combine vector, graph, and event/episodic memory, with clear roles and known failure modes for each, instead of relying on a single ‘magic’ memory mechanism.

References:

- MemGPT (virtual context / tiered vector memory)

- Zep / Graphiti (temporal knowledge graph memory, DMR, LongMemEval)

- GraphRAG (knowledge-graph RAG, hierarchical communities)

- ALAS (transactional / disruption-aware multi-agent planning, execution logs)

- LangGraph (checkpoints / memory, thread-scoped state)

- Supplemental GraphRAG + temporal KG context

The post Comparing Memory Systems for LLM Agents: Vector, Graph, and Event Logs appeared first on MarkTechPost.