Building robust AI agents differs fundamentally from traditional software development, as it centers on probabilistic model behavior rather than deterministic code execution. This guide provides a neutral overview of methodologies for designing AI agents that are both reliable and adaptable, with an emphasis on creating clear boundaries, effective behaviors, and safe interactions.

What Is Agentic Design?

Agentic design refers to constructing AI systems capable of independent action within defined parameters. Unlike conventional coding, which specifies exact outcomes for inputs, agentic systems require designers to articulate desirable behaviors and trust the model to navigate specifics.

Variability in AI Responses

Traditional software returns the same output for identical inputs. In contrast, agentic systems, which are built on probabilistic models, can produce different yet contextually appropriate responses to the same input. This makes careful prompt and guideline design critical for both natural, human-like behavior and safety.

In an agentic system, a request like “Can you help me reset my password?” might elicit different yet appropriate replies, such as “Of course! Please tell me your username,” “Absolutely, let’s get started. What’s your email address?” or “I can assist with that. Do you remember your account ID?” This variability is intentional: it makes interactions feel closer to the nuance and flexibility of human dialogue. At the same time, this unpredictability calls for thoughtful guidelines and safeguards so the system responds safely and consistently across scenarios.
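To make the contrast concrete, here is a toy sketch. The random choice over a fixed list of acceptable replies is only a stand-in for a language model's sampling (a real agent generates new text rather than picking from a list), and the function names are hypothetical.

```python
import random

# Hypothetical pool of acceptable replies to a password-reset request.
# Sampling from it stands in for an LLM's probabilistic generation.
PASSWORD_RESET_REPLIES = [
    "Of course! Please tell me your username.",
    "Absolutely, let's get started. What's your email address?",
    "I can assist with that. Do you remember your account ID?",
]


def deterministic_handler(request: str) -> str:
    """Traditional software: identical input, identical output."""
    return "Enter your username to reset your password."


def probabilistic_handler(request: str, rng: random.Random) -> str:
    """Agent-style: wording varies between calls, but every option stays on task."""
    return rng.choice(PASSWORD_RESET_REPLIES)


rng = random.Random()
request = "Can you help me reset my password?"
for _ in range(3):
    print(deterministic_handler(request), "|", probabilistic_handler(request, rng))
```

In this toy, every variant is acceptable by construction; in a real agent, that guarantee has to come from guidelines and safeguards.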

Why Clear Instructions Matter

Language models interpret instructions rather than execute them literally. Vague guidance such as:

```python
await agent.create_guideline(
    condition="User expresses frustration",
    action="Try to make them happy",
)
```

can lead to unpredictable or unsafe behavior, such as unintended offers or promises. Instead, instructions should be specific, safe, and action-focused:

```python
await agent.create_guideline(
    condition="User is upset by a delayed delivery",
    action="Acknowledge the delay, apologize, and provide a status update",
)
```

This approach helps keep the model’s actions aligned with organizational policy and user expectations.
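A practical side effect of a concrete action is that it becomes checkable. The sketch below uses hypothetical names, and its keyword matching is a deliberately crude stand-in for human review or an LLM-based grader. It tests whether a reply covers each step of the delayed-delivery guideline; no comparable check can be written for “Try to make them happy.”

```python
# Hypothetical: each step of the guideline's action, paired with phrases
# that suggest the reply carried it out.
DELAY_GUIDELINE_CHECKS = {
    "acknowledge the delay": ("delay", "late", "taking longer"),
    "apologize": ("sorry", "apolog"),
    "provide a status update": ("status", "currently", "expected", "tracking"),
}


def missing_steps(reply: str, checks: dict[str, tuple[str, ...]]) -> list[str]:
    """Return the guideline steps the reply does not appear to cover."""
    text = reply.lower()
    return [
        step
        for step, phrases in checks.items()
        if not any(phrase in text for phrase in phrases)
    ]


good = ("I'm sorry your order is delayed. It is currently at the local "
        "depot and expected to arrive Thursday.")
vague = "Don't worry, I'll give you a full refund and free shipping forever!"

print(missing_steps(good, DELAY_GUIDELINE_CHECKS))  # []
print(missing_steps(vague, DELAY_GUIDELINE_CHECKS))
```

The second reply, the kind of improvised promise a vague guideline invites, fails all three steps.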

Building Compliance: Layers of Control

LLMs can’t be fully “controlled,” but you can still guide and constrain their behavior effectively.

Layer 1: Guidelines

Use guidelines to define and shape normal behavior.

```python
await agent.create_guideline(
    condition="Customer asks about topics outside your scope",
    action="Politely decline and redirect to what you can help with",
)
```

Layer 2: Canned Responses

For high-risk situations (such as policy or medical advice), use pre-approved canned responses to ensure consistency and safety.

```python
await agent.create_canned_response(
    template="I can help with account questions, but for policy details I'll connect you to a specialist."
)
```

This layered approach reduces risk: guidelines shape everyday behavior, while canned responses keep the agent from improvising in sensitive situations.
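The two layers can be illustrated outside any framework. In this minimal sketch, which is not how Parlant chooses between guidelines and canned responses, a keyword check routes high-risk topics to pre-approved text and everything else to model generation. `respond`, `HIGH_RISK_TOPICS`, `DEFAULT_CANNED`, and the `generate` callback are hypothetical.

```python
from collections.abc import Callable
from dataclasses import dataclass

# Hypothetical topic list; a production system would use a real classifier.
HIGH_RISK_TOPICS = ("policy", "medical", "legal")

# Pre-approved templates (Layer 2). The "policy" text reuses the canned
# response above; DEFAULT_CANNED is an assumed fallback.
CANNED_RESPONSES = {
    "policy": "I can help with account questions, but for policy details "
              "I'll connect you to a specialist.",
}
DEFAULT_CANNED = "I can't advise on that, but I can connect you to a specialist."


@dataclass
class Reply:
    text: str
    source: str  # "canned" or "generated"


def respond(message: str, generate: Callable[[str], str]) -> Reply:
    lowered = message.lower()
    for topic in HIGH_RISK_TOPICS:
        if topic in lowered:
            # Layer 2: high-risk topics get fixed, pre-approved text only.
            return Reply(CANNED_RESPONSES.get(topic, DEFAULT_CANNED), "canned")
    # Layer 1: everything else is generated, shaped by guidelines.
    return Reply(generate(message), "generated")


def fake_model(message: str) -> str:
    return f"(model draft for: {message})"


print(respond("What does your refund policy cover?", fake_model).source)  # canned
print(respond("How do I update my email?", fake_model).source)  # generated
```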

Tool Calling: When Agents Take Action

When AI agents take action using tools such as APIs or functions, the process involves more complexity than simply executing a command. For example, if a user says, “Schedule a meeting with Sarah for next week,” the agent must interpret several unclear elements: Which Sarah is being referred to? What specific day and time within “next week” should the meeting be scheduled? And on which calendar?

This illustrates the Parameter Guessing Problem, where the agent attempts to infer missing details that weren’t explicitly provided. To address this, tools should be designed with clear purpose descriptions, parameter hints, and contextual examples to reduce ambiguity. Additionally, tool names should be intuitive and parameter types consistent, helping the agent reliably select and populate inputs. Well-structured tools improve accuracy, reduce errors, and make the interactions smoother and more predictable for both the agent and the user.

This kind of thoughtful tool design is essential for effective, safe agent behavior in real-world applications.
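One way to avoid parameter guessing is to give the tool precise parameter types and have the agent ask about anything missing or ambiguous before calling it. The sketch below applies that to the meeting example; `MeetingRequest`, `clarifying_questions`, and the contact data are hypothetical, and a real tool would look people up in an actual directory.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date

# Hypothetical contact directory; a real tool would query the user's address book.
CONTACTS = {
    "sarah": ["sarah.lee@example.com", "sarah.ortiz@example.com"],
    "omar": ["omar.haddad@example.com"],
}


@dataclass
class MeetingRequest:
    attendee: str                  # name as the user said it, e.g. "Sarah"
    day: date | None = None        # a concrete date, never "next week"
    start_time: str | None = None  # "HH:MM", 24-hour clock
    calendar: str | None = None    # which calendar to write to


def clarifying_questions(req: MeetingRequest) -> list[str]:
    """Return what the agent should ask, rather than guess, before calling the tool."""
    questions = []
    matches = CONTACTS.get(req.attendee.lower(), [])
    if not matches:
        questions.append(f"I couldn't find {req.attendee}. What is their email address?")
    elif len(matches) > 1:
        questions.append(f"Which {req.attendee} do you mean: {' or '.join(matches)}?")
    if req.day is None:
        questions.append("Which day should the meeting be on?")
    if req.start_time is None:
        questions.append("What time should it start?")
    if req.calendar is None:
        questions.append("Which calendar should I add it to?")
    return questions


# "Schedule a meeting with Sarah for next week" leaves everything but the name open.
for question in clarifying_questions(MeetingRequest(attendee="Sarah")):
    print(question)
```

The tool is called only once `clarifying_questions` returns an empty list.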

Agent Design Is Iterative

Unlike static software, agent behavior in agentic systems is not fixed; it matures over time through a continuous cycle of observation, evaluation, and refinement. The process typically begins with implementing straightforward, high-frequency user scenarios—those “happy path” interactions where the agent’s responses can be easily anticipated and validated. Once deployed in a safe testing environment, the agent’s behavior is closely monitored for unexpected answers, user confusion, or any breaches of policy guidelines.

As issues are observed, the agent is systematically improved by introducing targeted rules or refining existing logic to address problematic cases. For example, if users repeatedly decline an upsell offer but the agent continues to bring it up, a focused rule can be added to prevent this behavior within the same session. Through this deliberate, incremental tuning, the agent gradually evolves from a basic prototype into a sophisticated conversational system that is responsive, reliable, and well-aligned with both user expectations and operational constraints.
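The upsell fix amounts to remembering state within a session. The sketch below expresses that rule in plain Python rather than as a guideline. `Session`, `should_offer`, `record_reply`, and the list of declining phrases are all hypothetical; a real agent would rely on the model to recognize a refusal rather than on exact string matches.

```python
from dataclasses import dataclass, field

# Hypothetical phrases treated as a refusal; a real agent would let the
# model judge whether the user declined.
DECLINE_PHRASES = {"no", "no thanks", "not interested"}


@dataclass
class Session:
    declined_offers: set[str] = field(default_factory=set)


def record_reply(session: Session, offer: str, user_reply: str) -> None:
    """Remember that the user turned down this offer."""
    if user_reply.strip().lower().rstrip(".!") in DECLINE_PHRASES:
        session.declined_offers.add(offer)


def should_offer(session: Session, offer: str) -> bool:
    """The added rule: never repeat an offer the user already declined this session."""
    return offer not in session.declined_offers


session = Session()
print(should_offer(session, "premium_upgrade"))  # True
record_reply(session, "premium_upgrade", "No thanks.")
print(should_offer(session, "premium_upgrade"))  # False
```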

Writing Effective Guidelines

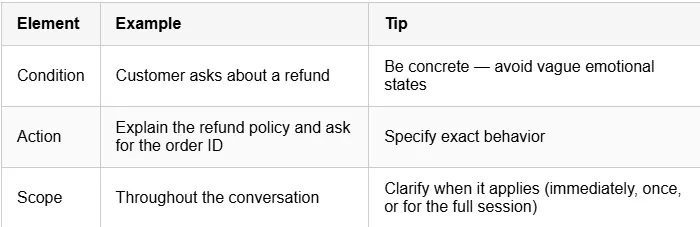

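Each guideline has three key parts: a condition that describes when it applies, an action that says what the agent should do, and, optionally, the tools the agent may call while carrying out that action.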

Example:

```python
await agent.create_guideline(
    condition="Customer requests a specific appointment time that's unavailable",
    action="Offer the three closest available slots as alternatives",
    tools=[get_available_slots],
)
```

Structured Conversations: Journeys

For complex tasks such as booking appointments, onboarding, or troubleshooting, simple guidelines alone are often insufficient. This is where Journeys become essential. Journeys provide a framework to design structured, multi-step conversational flows that guide the user through a process smoothly while maintaining a natural dialogue.

For example, a booking flow can be initiated by creating a journey with a clear title and conditions defining when it applies, such as when a customer wants to schedule an appointment. The journey then progresses through states—first asking the customer what type of service they need, then checking availability using an appropriate tool, and finally offering available time slots. This structured approach balances flexibility and control, enabling the agent to handle complex interactions efficiently without losing the conversational feel.
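The state progression just described can be illustrated without any framework as a small state machine. This is a hypothetical sketch, not how Parlant executes journeys: the state names, `FREE_SLOTS`, and `run_journey` are invented for illustration, and the user's reply is scripted rather than interpreted by a model.

```python
from __future__ import annotations

from collections.abc import Callable
from dataclasses import dataclass

# Hypothetical availability data; a real tool would query a booking system.
FREE_SLOTS = {"haircut": ["Mon 10:00", "Tue 14:00"], "massage": []}


@dataclass
class State:
    kind: str                             # "chat" (talk to the user) or "tool" (run a function)
    action: Callable[[dict], str | None]  # chat: returns a message; tool: updates the context
    next: str | None = None               # following state's name; None ends the journey
    answer_key: str | None = None         # chat states: where to store the user's reply


def ask_service(ctx: dict) -> str:
    return "What type of service do you need?"


def check_availability(ctx: dict) -> None:
    ctx["slots"] = FREE_SLOTS.get(ctx["service"], [])


def offer_slots(ctx: dict) -> str:
    if not ctx["slots"]:
        return f"Sorry, there are no open {ctx['service']} slots right now."
    return "Available times: " + ", ".join(ctx["slots"])


BOOKING_JOURNEY = {
    "ask_service": State("chat", ask_service, "check_availability", answer_key="service"),
    "check_availability": State("tool", check_availability, "offer_slots"),
    "offer_slots": State("chat", offer_slots),
}


def run_journey(journey: dict[str, State], start: str, user_replies: list[str]) -> list[str]:
    """Walk the states in order, returning the agent's messages."""
    ctx: dict = {}
    replies = iter(user_replies)
    transcript: list[str] = []
    name: str | None = start
    while name is not None:
        state = journey[name]
        message = state.action(ctx)
        if state.kind == "chat":
            transcript.append(message)
            if state.answer_key is not None:
                ctx[state.answer_key] = next(replies)  # simulated user turn
        name = state.next
    return transcript


print(run_journey(BOOKING_JOURNEY, "ask_service", ["haircut"]))
# ['What type of service do you need?', 'Available times: Mon 10:00, Tue 14:00']
```

This rigid loop captures only the ordering of chat and tool states; handling users who skip steps or change their minds needs more than a fixed sequence.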

Example: Booking Flow

```python
booking_journey = await agent.create_journey(
    title="Book Appointment",
    conditions=["Customer wants to schedule an appointment"],
    description="Guide customer through the booking process",
)

# Each transition_to() call adds the next state; .target is the state it leads to.
t1 = await booking_journey.initial_state.transition_to(
    chat_state="Ask what type of service they need"
)

# A tool state: the agent runs the availability check here.
t2 = await t1.target.transition_to(
    tool_state=check_availability_for_service
)

t3 = await t2.target.transition_to(
    chat_state="Offer available time slots"
)
```

Balancing Flexibility and Predictability

Balancing flexibility and predictability is essential when designing an AI agent. The agent should feel natural and conversational, rather than overly scripted, but it must still operate within safe and consistent boundaries.

If instructions are too rigid (for example, telling the agent to “Say exactly: ‘Our premium plan is $99/month’”), the interaction can feel mechanical and unnatural. On the other hand, instructions that are too vague, such as “Help them understand our pricing”, can lead to unpredictable or inconsistent responses.

A balanced approach provides clear direction while allowing the agent some adaptability, for example: “Explain our pricing tiers clearly, highlight the value, and ask about the customer’s needs to recommend the best fit.” This ensures the agent remains both reliable and engaging in its interactions.

Designing for Real Conversations

Designing for real conversations requires recognizing that, unlike web forms, conversations are non-linear. Users may change their minds, skip steps, or move the discussion in unexpected directions. To handle this effectively, there are several key principles to follow.

- Context preservation ensures the agent keeps track of information already provided so it can respond appropriately.

- Progressive disclosure means revealing options or information gradually, rather than overwhelming the user with everything at once.

- Recovery mechanisms allow the agent to manage misunderstandings or deviations gracefully, for example by rephrasing a response or gently redirecting the conversation for clarity.

This approach helps create interactions that feel natural, flexible, and user-friendly.
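The first two principles can be sketched in a few lines. In this hypothetical example, regular expressions stand in for the model's understanding of the user's message, an assumption made only to keep the example self-contained. Details are merged into a running context in whatever order they arrive, later mentions overwrite earlier ones, and the agent asks only for the next missing item.

```python
import re

# Hypothetical slot extractors standing in for model-based understanding.
PATTERNS = {
    "service": re.compile(r"\b(haircut|massage|facial)\b", re.I),
    "day": re.compile(r"\b(monday|tuesday|wednesday|thursday|friday)\b", re.I),
    "time": re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", re.I),
}
QUESTIONS = {
    "service": "What type of service would you like?",
    "day": "Which day suits you?",
    "time": "What time works best?",
}


def update_context(ctx: dict, message: str) -> dict:
    """Context preservation: keep what was already said, merge new details in any order.
    A later mention overwrites an earlier one, so users can change their minds."""
    for slot, pattern in PATTERNS.items():
        match = pattern.search(message)
        if match:
            ctx[slot] = match.group(1).lower()
    return ctx


def next_prompt(ctx: dict) -> str:
    """Progressive disclosure: ask only for the next missing detail."""
    for slot, question in QUESTIONS.items():
        if slot not in ctx:
            return question
    return f"Great, booking a {ctx['service']} on {ctx['day'].title()} at {ctx['time']}."


ctx: dict = {}
for msg in ["Can I come in Friday at 3pm?", "A haircut please", "Actually, make it Thursday"]:
    update_context(ctx, msg)
    print(next_prompt(ctx))
```

The user supplies the day and time before the service, then changes the day; the agent never re-asks for details it already has.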

Effective agentic design means starting with core features, focusing on main tasks before tackling rare cases. It involves careful monitoring to spot any issues in the agent’s behavior. Improvements should be based on real observations, adding clear rules to guide better responses. It’s important to balance clear boundaries that keep the agent safe while allowing natural, flexible conversation. For complex tasks, use structured flows called journeys to guide multi-step interactions. Finally, be transparent about what the agent can do and its limits to set proper expectations. This simple process helps create reliable, user-friendly AI agents.

The post Agentic Design Methodology: How to Build Reliable and Human-Like AI Agents using Parlant appeared first on MarkTechPost.