As AI agents evolve beyond simple chatbots, new design patterns have emerged to make them more capable, adaptable, and intelligent. These agentic design patterns define how agents think, act, and collaborate to solve complex problems in real-world settings. Whether it’s reasoning through tasks, writing and executing code, connecting to external tools, or even reflecting on their own outputs, each pattern represents a distinct approach to building smarter, more autonomous systems. Here are five of the most popular agentic design patterns every AI engineer should know.

ReAct Agent

A ReAct agent is an AI agent built on the “reasoning and acting” (ReAct) framework, which combines step-by-step thinking with the ability to use external tools. Instead of following fixed rules, it thinks through problems, takes actions like searching or running code, observes the results, and then decides what to do next.

The ReAct framework works much like human problem solving: think, act, and adjust along the way. For example, imagine planning dinner. You start by thinking, “What do I have at home?” (reasoning), then check your fridge (action). Seeing only vegetables (observation), you adjust your plan: “I’ll make pasta with vegetables.” In the same way, ReAct agents alternate between thoughts, actions, and observations to handle complex tasks and make better decisions.

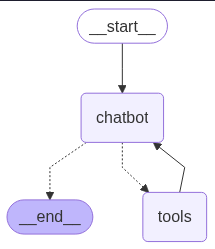

The image above illustrates the basic architecture of a ReAct agent. The agent has access to various tools that it can use when required. It reasons independently, decides whether to invoke a tool, and re-runs actions after adjusting to new observations. The dotted lines represent conditional paths, showing that the agent calls a tool node only when it deems it necessary.
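The thought, action, observation loop can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `scripted_llm` is a hypothetical stand-in for a real model call that replays the dinner example, and the `Action: tool[input]` / `Final Answer:` text format, the tool names, and the `max_steps` cap are all assumptions chosen for the sketch.

```python
import re
from typing import Callable

# Hypothetical tools; a real agent would wrap search APIs, code runners, etc.
def check_fridge(_: str) -> str:
    return "vegetables"

def search_recipes(ingredient: str) -> str:
    return f"3 recipes found for {ingredient}"

TOOLS: dict[str, Callable[[str], str]] = {
    "check_fridge": check_fridge,
    "search_recipes": search_recipes,
}

# Assumed output format: "Action: tool_name[input]" or "Final Answer: ..."
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")

def scripted_llm(question: str, transcript: list[str]) -> str:
    """Hypothetical stand-in for an LLM: replays the dinner example."""
    observations = [t for t in transcript if t.startswith("Observation:")]
    if not observations:
        return "Thought: What do I have at home?\nAction: check_fridge[]"
    seen = observations[-1].removeprefix("Observation: ")
    return f"Thought: I only have {seen}.\nFinal Answer: pasta with {seen}"

def react(question: str, llm=scripted_llm, max_steps: int = 5) -> str:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = llm(question, transcript)             # reason
        transcript.append(step)
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:                            # model requested no tool
            transcript.append("Observation: no valid action")
            continue
        name, arg = match.groups()
        tool = TOOLS.get(name)                       # act only when asked to
        result = tool(arg) if tool else f"unknown tool {name}"
        transcript.append(f"Observation: {result}")  # observe, then loop
    return "stopped: step limit reached"

print(react("What should I make for dinner?"))  # pasta with vegetables
```

Swapping `scripted_llm` for a real model call leaves the loop unchanged; the step cap guards against an agent that never settles on an answer.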

CodeAct Agent

A CodeAct Agent is an AI system designed to write, run, and refine code based on natural language instructions. Instead of just generating text, it actually executes the code, analyzes the results, and adjusts its approach, which lets it work through complex, multi-step problems.

At its core, CodeAct enables an AI assistant to:

- Generate code from natural language input

- Execute that code in a safe, controlled environment

- Review the execution results

- Improve its response based on what it learns

The framework includes key components like a code execution environment, workflow definition, prompt engineering, and memory management, all working together to ensure the agent can perform real tasks reliably.
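The generate, execute, review, improve cycle listed above can be sketched as follows. This is an assumption-laden illustration rather than how any particular CodeAct system works: `hypothetical_codegen` stands in for an LLM and deliberately writes a buggy first draft, "memory" is just a list of past attempts, and `run_code` executes code in a separate Python process with a timeout. A child process is not a secure sandbox; real deployments isolate execution much more strongly.

```python
import subprocess
import sys

def hypothetical_codegen(task: str, memory: list[dict]) -> str:
    """Stand-in for an LLM that writes Python for `task`. The first draft
    indexes past the end of the list; once an error is in memory, it
    returns a corrected version."""
    if not memory:
        return "nums = [3, 1, 2]\nprint(sorted(nums)[3])"   # IndexError
    return "nums = [3, 1, 2]\nprint(sorted(nums)[-1])"

def run_code(code: str, timeout: float = 5.0) -> tuple[bool, str]:
    """Execute code in a child Python process (isolation-lite, NOT a sandbox)."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return False, "timed out"
    ok = proc.returncode == 0
    return ok, proc.stdout if ok else proc.stderr

def codeact(task: str, codegen=hypothetical_codegen, max_attempts: int = 3) -> str:
    memory: list[dict] = []                          # past attempts and results
    for _ in range(max_attempts):
        code = codegen(task, memory)                 # 1. generate from instruction
        ok, output = run_code(code)                  # 2. execute
        memory.append({"code": code, "ok": ok, "output": output})  # 3. review
        if ok:
            return output.strip()
        # 4. improve: the next codegen call sees the traceback in memory
    return f"failed after {max_attempts} attempts: {memory[-1]['output'].strip()}"

print(codeact("print the largest number in [3, 1, 2]"))  # 3
```

Keeping every attempt in `memory` is what lets the next generation step react to the concrete error instead of starting from scratch.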

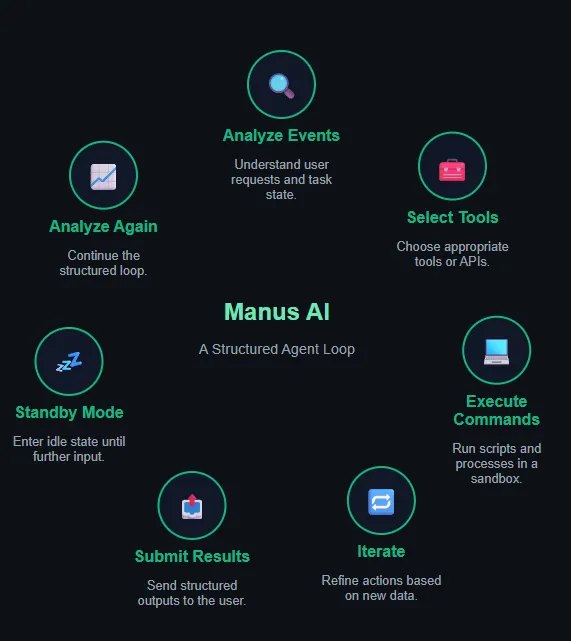

A good example is Manus AI, which uses a structured agent loop to process tasks step by step. It first analyzes the user’s request, selects the right tools or APIs, executes commands in a secure Linux sandbox, and iterates based on feedback until the job is done. Finally, it submits results to the user and enters standby mode, waiting for the next instruction.

Self-Reflection

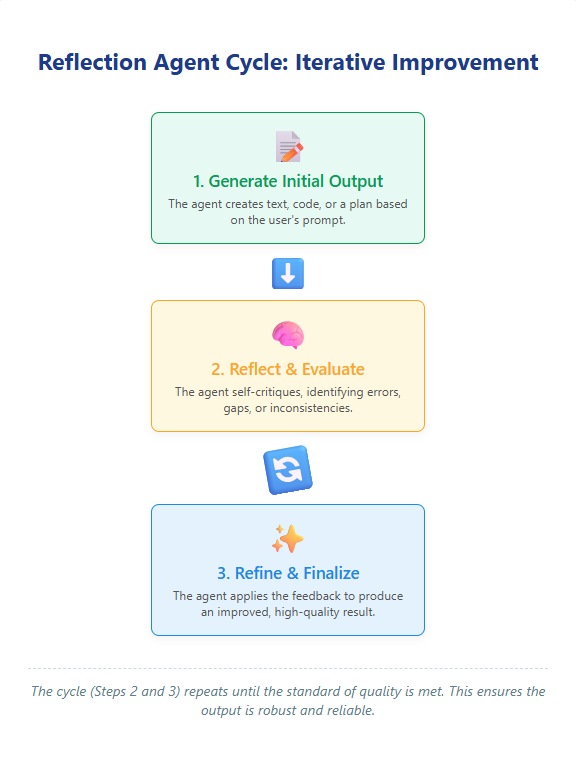

A Reflection Agent is an AI that can step back, evaluate its own work, identify mistakes, and improve through trial and error, much as humans learn from feedback.

This type of agent operates in a cyclical process: it first generates an initial output, such as text or code, based on a user’s prompt. Next, it reflects on that output, spotting errors, inconsistencies, or areas for improvement, often applying expert-like reasoning. Finally, it refines the output by incorporating its own feedback, repeating this cycle until the result reaches a high-quality standard.
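The generate, reflect, refine cycle can be sketched as a loop. This is a toy under stated assumptions: `generate`, `reflect`, and `refine` are hypothetical stand-ins for three LLM calls, two hard-coded rules (a length limit and a required keyword) play the role of the critic, and `max_rounds` is an assumed safety cap so the loop always terminates.

```python
def generate(task: str) -> str:
    """Hypothetical first draft from an LLM: too long, missing the keyword."""
    return ("This agent design pattern alternates between thinking steps "
            "and tool calls to solve tasks")

def reflect(draft: str, keyword: str, max_len: int) -> list[str]:
    """Stand-in critic. A real agent would ask an LLM to critique the draft;
    two fixed rules play that role here. An empty list means 'good enough'."""
    issues = []
    if keyword.lower() not in draft.lower():
        issues.append(f"mention {keyword}")
    if len(draft) > max_len:
        issues.append(f"shorten to at most {max_len} characters")
    return issues

def refine(draft: str, issues: list[str], keyword: str, max_len: int) -> str:
    """Stand-in reviser that applies each critique item in order."""
    for issue in issues:
        if issue.startswith("mention"):
            draft = f"{keyword}: {draft}"
        elif issue.startswith("shorten"):
            kept = ""
            for word in draft.split():               # keep whole words only
                candidate = f"{kept} {word}".strip()
                if len(candidate) > max_len:
                    break
                kept = candidate
            draft = kept
    return draft

def reflection_loop(task: str, keyword: str = "ReAct", max_len: int = 60,
                    max_rounds: int = 3) -> tuple[str, list]:
    draft = generate(task)                           # 1. initial output
    history = []
    for _ in range(max_rounds):
        issues = reflect(draft, keyword, max_len)    # 2. self-critique
        history.append((draft, issues))
        if not issues:                               # quality bar met
            break
        draft = refine(draft, issues, keyword, max_len)  # 3. revise
    return draft, history

final, history = reflection_loop("one-line summary of the ReAct pattern")
print(final)         # ReAct: This agent design pattern alternates between thinking
print(len(history))  # 2
```

The `history` list records each draft with its critique, which is useful for debugging why the agent stopped.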

Reflection Agents are especially useful for tasks that benefit from self-evaluation and iterative improvement, making them more reliable and adaptable than agents that generate content in a single pass.

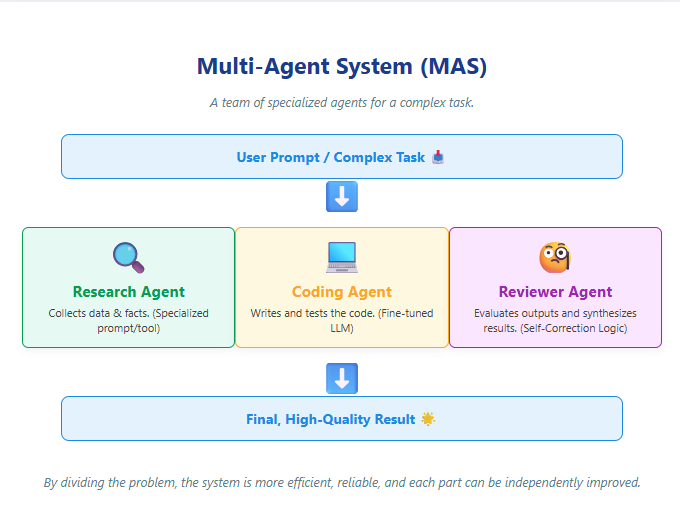

Multi-Agent Workflow

A Multi-Agent System uses a team of specialized agents instead of relying on a single agent to handle everything. Each agent focuses on a specific task, leveraging its strengths to achieve better overall results.

This approach offers several advantages: focused agents are more likely to succeed on their specific tasks than a single agent managing many tools; separate prompts and instructions can be tailored for each agent, even allowing the use of fine-tuned LLMs; and each agent can be evaluated and improved independently without affecting the broader system. By dividing complex problems into smaller, manageable units, multi-agent designs make large workflows more efficient, flexible, and reliable.

The above image visualizes a Multi-Agent System (MAS), illustrating how a single user prompt is decomposed into specialized tasks handled in parallel by three distinct agents (Research, Coding, and Reviewer) before being synthesized into a final, high-quality output.
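The fan-out and synthesis flow described above can be sketched with Python's standard `concurrent.futures`. Everything model-related is assumed: `fake_llm` stands in for real model calls, and the role instructions, the fixed `decompose` split, and the `synthesize` step are hypothetical placeholders rather than any framework's API.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable

def fake_llm(instructions: str, subtask: str) -> str:
    """Hypothetical model call; each agent could use a different (even fine-tuned) model."""
    return f"[{instructions}] {subtask}"

@dataclass
class Agent:
    name: str
    instructions: str                    # role-specific prompt, tailored per agent
    model: Callable[[str, str], str]

    def run(self, subtask: str) -> str:
        return self.model(self.instructions, subtask)

AGENTS = {
    "research": Agent("Research", "find and cite facts", fake_llm),
    "coding": Agent("Coding", "write tested code", fake_llm),
    "review": Agent("Reviewer", "flag risks and errors", fake_llm),
}

def decompose(prompt: str) -> dict[str, str]:
    """Hypothetical fixed split of one user prompt into specialized subtasks."""
    return {
        "research": f"background for {prompt}",
        "coding": f"implement {prompt}",
        "review": f"risks in {prompt}",
    }

def synthesize(results: dict[str, str]) -> str:
    """Combine agent outputs; a real system might use another LLM call here."""
    return "\n".join(f"{AGENTS[key].name}: {out}" for key, out in results.items())

def run_multi_agent(prompt: str) -> str:
    subtasks = decompose(prompt)
    with ThreadPoolExecutor(max_workers=len(subtasks)) as pool:  # run in parallel
        futures = {key: pool.submit(AGENTS[key].run, task)
                   for key, task in subtasks.items()}
        results = {key: fut.result() for key, fut in futures.items()}
    return synthesize(results)

print(run_multi_agent("a CSV parser"))
```

Because each agent is a separate object with its own instructions, it can be exercised in isolation, for example `AGENTS["review"].run("risks in X")`, which mirrors the independent-evaluation advantage described earlier.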

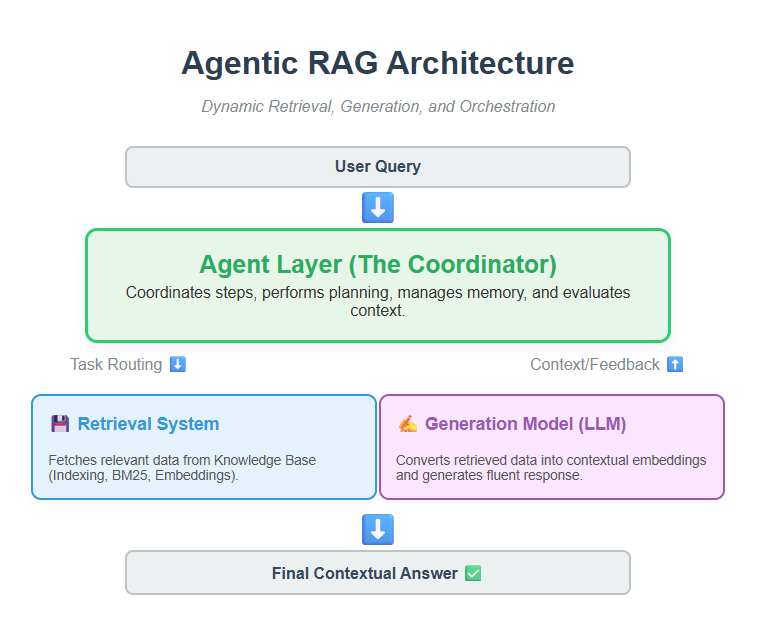

Agentic RAG

Agentic RAG agents take information retrieval a step further by actively searching for relevant data, evaluating it, generating well-informed responses, and remembering what they’ve learned for future use. Unlike traditional (naive) RAG, which follows a static retrieve-then-generate pipeline, Agentic RAG employs autonomous agents to dynamically manage and improve both retrieval and generation.

The architecture consists of three main components.

- The Retrieval System fetches relevant information from a knowledge base using techniques like indexing and query processing, with ranking methods such as BM25 (sparse, keyword-based) or dense embedding similarity.

- The Generation Model, typically a fine-tuned LLM, converts the retrieved data into contextual embeddings, focuses on key information using attention mechanisms, and generates coherent, fluent responses.

- The Agent Layer coordinates the retrieval and generation steps, making the process dynamic and context-aware while enabling the agent to remember and leverage past information.

Together, these components allow Agentic RAG to deliver smarter, more contextual answers than traditional RAG systems.
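The interplay of the three components can be sketched as follows. The assumptions are deliberate and should be read as such: the retriever is a from-scratch Okapi BM25 (k1 = 1.5, b = 0.75, with a common non-negative IDF variant), the generation model is replaced by a string template, the `REWRITES` table is a hypothetical stand-in for an LLM reformulating a weak query, memory is a plain dictionary cache, and the `min_score` threshold is arbitrary.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

class BM25:
    """Okapi BM25 with IDF = ln(1 + (N - n + 0.5) / (n + 0.5))."""
    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.docs, self.k1, self.b = docs, k1, b
        self.tokens = [tokenize(d) for d in docs]
        self.avgdl = sum(len(t) for t in self.tokens) / len(self.tokens)
        df = Counter(term for toks in self.tokens for term in set(toks))
        n = len(docs)
        self.idf = {t: math.log(1 + (n - c + 0.5) / (c + 0.5)) for t, c in df.items()}

    def score(self, query: str, i: int) -> float:
        tf = Counter(self.tokens[i])
        norm = self.k1 * (1 - self.b + self.b * len(self.tokens[i]) / self.avgdl)
        return sum(self.idf[q] * tf[q] * (self.k1 + 1) / (tf[q] + norm)
                   for q in tokenize(query) if q in tf)

    def top(self, query: str) -> tuple[float, str]:
        scored = [(self.score(query, i), d) for i, d in enumerate(self.docs)]
        return max(scored, key=lambda s: s[0])

# Hypothetical rewrites an LLM might propose when retrieval looks weak.
REWRITES = {"self-critique": "reflection"}

class AgenticRAG:
    def __init__(self, docs: list[str], min_score: float = 0.5):
        self.retriever = BM25(docs)              # retrieval system
        self.min_score = min_score               # assumed relevance threshold
        self.memory: dict[str, str] = {}         # past query -> answer

    def answer(self, query: str, max_tries: int = 2) -> str:
        if query in self.memory:                 # reuse what was learned before
            return self.memory[query]
        q = query
        for _ in range(max_tries):
            score, doc = self.retriever.top(q)   # retrieve
            if score >= self.min_score:          # agent evaluates relevance
                break
            q = " ".join(REWRITES.get(w, w) for w in q.split())  # reformulate
        else:
            return "no sufficiently relevant document found"
        answer = f"Based on: {doc}"              # stand-in for LLM generation
        self.memory[query] = answer
        return answer

docs = [
    "ReAct agents interleave reasoning steps with tool calls",
    "Reflection agents evaluate and revise their own drafts",
    "Multi agent systems split work across specialized agents",
]
rag = AgenticRAG(docs)
print(rag.answer("what is self-critique"))
```

Here the first query matches nothing, so the agent layer rewrites it, retries, and only then generates and caches an answer; a naive pipeline would have generated from whatever the first retrieval returned.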

The post 5 Most Popular Agentic AI Design Patterns Every AI Engineer Should Know appeared first on MarkTechPost.